Introduction

Vulkan is known for being explicit and verbose. But the required verbosity has steadily decreased with each successive version, as new features are added and previous extensions are absorbed into the core API. Similarly, RAII has been a pillar of C++ since its inception, yet most existing Vulkan tutorials do not utilize it, instead choosing to "extend" the explicitness by manually cleaning up resources.

To fill that gap, this guide has the following goals:

- Leverage modern C++, VulkanHPP, and Vulkan 1.3 features

- Focus on keeping it simple and straightforward, not on performance

- Develop a basic but dynamic rendering foundation

To reiterate, the focus is not on performance; it is on a quick introduction to the current standard multi-platform graphics API while utilizing modern paradigms and tools (at the time of writing). Even disregarding potential performance gains, Vulkan has a better and more modern design and ecosystem than OpenGL, eg: there is no global state machine, parameters are passed by filling structs with meaningful member variable names, multi-threading is largely trivial (yes, it is actually easier to do on Vulkan than OpenGL), there is a comprehensive set of validation layers to catch misuse which can be enabled without any changes to application code, etc.

For an in-depth Vulkan guide, the official tutorial is recommended. vkguide and the original Vulkan Tutorial are also very popular and intensely detailed.

Target Audience

The guide is for you if you:

- Understand the principles of modern C++ and its usage

- Have created C++ projects using third-party libraries

- Are somewhat familiar with graphics

  - Having done OpenGL tutorials would be ideal

  - Experience with frameworks like SFML / SDL is great

- Don't mind if all the information you need isn't monolithically in one place (ie, this guide)

Some examples of what this guide does not focus on:

- GPU-driven rendering

- Real-time graphics from the ground up

- Considerations for tiled GPUs (eg mobile devices / Android)

Source

The source code for the project (as well as this guide) is located in this repository. Each section/* branch is intended to reflect the state of the code at the end of a particular section of the guide. Bugfixes / changes are generally backported, but there may be some divergence from the current state of the code (ie, in main). The source of the guide itself is only up-to-date on main; changes to it are not backported.

Getting Started

Vulkan is platform agnostic, which is one of the main reasons for its verbosity: it has to account for a wide range of implementations in its API. We shall constrain our approach to Windows and Linux (x64 or aarch64) and focus on discrete GPUs, enabling us to sidestep quite a bit of that verbosity. Vulkan 1.3 is widely supported by the target desktop platforms and reasonably recent graphics cards.

This doesn't mean that eg an integrated graphics chip will not be supported; it will just not be particularly designed/optimized for.

Technical Requirements

- Vulkan 1.3+ capable GPU and loader

- Vulkan 1.3+ SDK

  - This is required for validation layers, a critical component/tool to use when developing Vulkan applications. The project itself does not use the SDK.

  - Always using the latest SDK is recommended (1.4.x at the time of writing).

- Desktop operating system that natively supports Vulkan

  - Windows and/or Linux (distros that use repos with recent packages) is recommended.

  - macOS does not natively support Vulkan. It can be used through MoltenVK, but at the time of writing MoltenVK does not fully support Vulkan 1.3, so if you decide to take this route, you may face some roadblocks.

- C++23 compiler and standard library

  - GCC 14+, Clang 18+, and/or the latest MSVC are recommended. MinGW/MSYS is not recommended.

  - Using C++20 with replacements for C++23 specific features is possible, eg replace std::print() with fmt::print(), add () to lambdas, etc.

- CMake 3.24+

Overview

While support for C++ modules is steadily growing, tooling is not yet ready on all platforms/IDEs we want to target, so we will unfortunately still be using headers. This might change in the near future, followed by a refactor of this guide.

The project uses a "Build the World" approach, enabling usage of sanitizers, reproducible builds on any supported platform, and requiring a minimum of pre-installed dependencies on target machines. Feel free to use pre-built binaries instead; it doesn't change anything about how you would use Vulkan.

Dependencies

- GLFW for windowing, input, and Surface creation

- VulkanHPP (via Vulkan-Headers) for interacting with Vulkan

  - While Vulkan is a C API, it offers an official C++ wrapper library with many quality-of-life features. This guide almost exclusively uses that, except at the boundaries of other C libraries that themselves use the C API (eg GLFW and VMA).

- Vulkan Memory Allocator for dealing with Vulkan memory heaps

- GLM for GLSL-like linear algebra in C++

- Dear ImGui for UI

Project Layout

This page describes the layout used by the code in this guide. Everything here is just an opinionated option used by the guide, and is not related to Vulkan usage.

External dependencies are stuffed into a zip file that's decompressed by CMake during the configure stage. Using FetchContent is a viable alternative.

Ninja Multi-Config is the assumed generator used, regardless of OS/compiler. This is set up in a CMakePresets.json file in the project root. Additional custom presets can be added via CMakeUserPresets.json.

On Windows, Visual Studio CMake Mode uses this generator and automatically loads presets. With Visual Studio Code, the CMake Tools extension automatically uses presets. For other IDEs, refer to their documentation on using CMake presets.

Filesystem

```
.
|-- CMakeLists.txt          <== executable target
|-- CMakePresets.json
|-- [other project files]
|-- ext/
|   |-- CMakeLists.txt      <== external dependencies target
|-- src/
    |-- [sources and headers]
```

Validation Layers

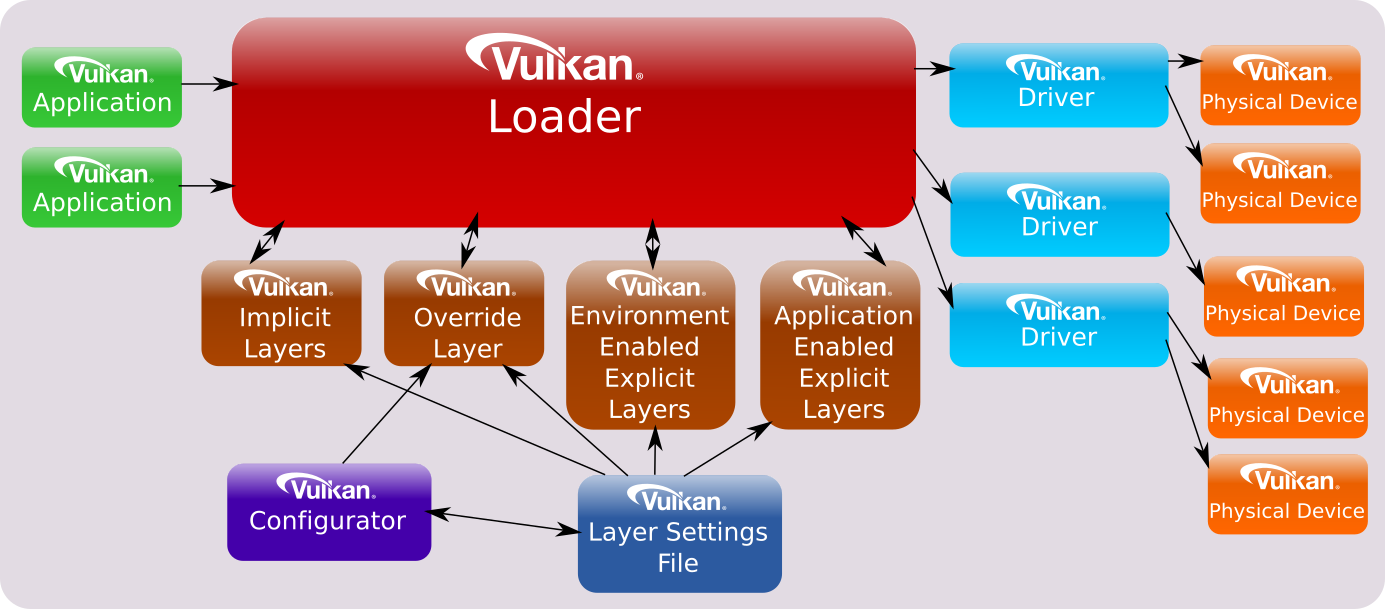

The part of Vulkan that apps interact with, the loader, is very powerful and flexible. Read more about it here. Its design enables it to chain API calls through configurable layers, eg for overlays, and most importantly for us: Validation Layers.

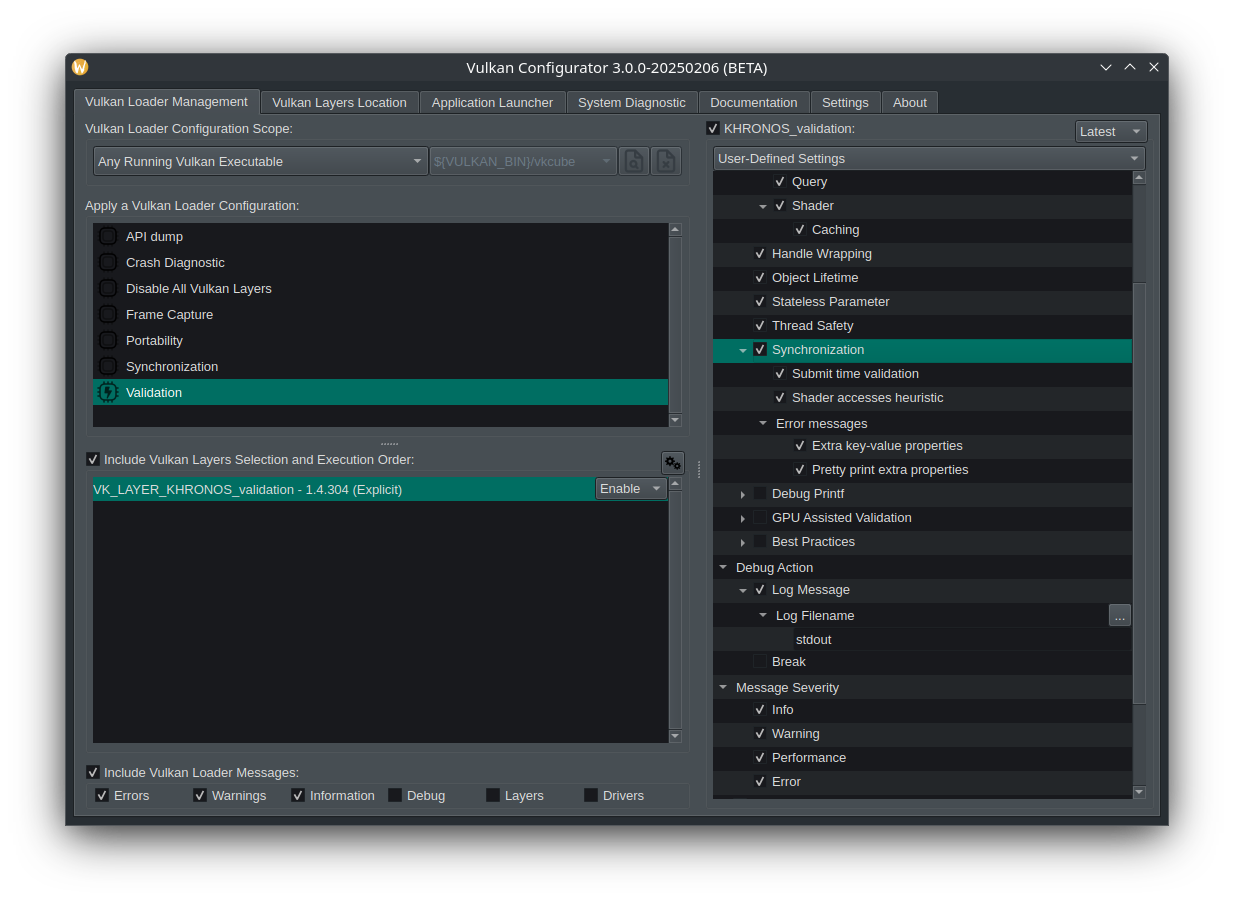

As suggested by the Khronos Group, the guide strongly recommends using Vulkan Configurator (GUI) for validation layers. It is included in the Vulkan SDK: just keep it running while developing Vulkan applications, and ensure it is set up to inject validation layers into all detected applications, with Synchronization Validation enabled. This approach provides a lot of flexibility at runtime, including the ability to have VkConfig break the debugger on encountering an error, and also eliminates the need for validation-layer-specific code in the applications.

Note: modify your development (or desktop) environment's PATH (or use LD_LIBRARY_PATH on supported systems) to make sure the SDK's binaries (shared libraries) are visible first.

Application

class App will serve as the owner and driver of the entire application. While there will only be one instance, using a class enables us to leverage RAII and destroy all its resources automatically and in the correct order, and avoids the need for globals.

```cpp
// app.hpp
#pragma once

namespace lvk {
class App {
  public:
	void run();
};
} // namespace lvk
```

```cpp
// app.cpp
#include "app.hpp"

namespace lvk {
void App::run() {
	// TODO
}
} // namespace lvk
```
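The "correct order" comes from a core C++ rule: non-static data members are constructed in declaration order and destroyed in the reverse order. A minimal standalone sketch of that rule (Tracker, Owner and g_events are hypothetical names, not part of the guide's code) that records lifetime events:

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// global log of lifetime events (illustration only).
inline std::vector<std::string> g_events{};

// hypothetical resource that logs its construction and destruction.
struct Tracker {
	explicit Tracker(std::string label) : name(std::move(label)) {
		g_events.push_back("create " + name);
	}
	~Tracker() { g_events.push_back("destroy " + name); }

	Tracker(Tracker const&) = delete;
	auto operator=(Tracker const&) -> Tracker& = delete;

	std::string name;
};

// hypothetical owner, mirroring App: members are constructed top to bottom
// and destroyed bottom to top.
struct Owner {
	Tracker window{"window"};
	Tracker instance{"instance"};
	Tracker device{"device"};
};
```

Declaring a dependent resource (eg a Vulkan Instance) after the thing it depends on (the window / GLFW) is therefore enough to guarantee it is destroyed first.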

Main

main.cpp will not do much: it's mainly responsible for transferring control to the actual entry point, and catching fatal exceptions.

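```cpp
// main.cpp
#include "app.hpp"
#include <cstdio>
#include <cstdlib>
#include <exception>
#include <print>

auto main() -> int {
	try {
		lvk::App{}.run();
	} catch (std::exception const& e) {
		std::println(stderr, "PANIC: {}", e.what());
		return EXIT_FAILURE;
	} catch (...) {
		// unknown exception type: report to stderr like the branch above.
		std::println(stderr, "PANIC!");
		return EXIT_FAILURE;
	}
}
```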

Initialization

This section deals with initialization of all the systems needed, including:

- Initializing GLFW and creating a Window

- Creating a Vulkan Instance

- Creating a Vulkan Surface

- Selecting a Vulkan Physical Device

- Creating a Vulkan logical Device

- Creating a Vulkan Swapchain

If any step here fails, it is a fatal error as we can't do anything meaningful beyond that point.

GLFW Window

We will use GLFW (3.4) for windowing and related events. The library - like all external dependencies - is configured and added to the build tree in ext/CMakeLists.txt. GLFW_INCLUDE_VULKAN is defined for all consumers, to enable GLFW's Vulkan related functions (known as Window System Integration (WSI)). GLFW 3.4 supports Wayland on Linux, and by default it builds backends for both X11 and Wayland. For this reason it will need the development packages for both platforms (and some other Wayland/CMake dependencies) to configure/build successfully. A particular backend can be requested at runtime if desired via GLFW_PLATFORM.

Although it is quite feasible to have multiple windows in a Vulkan-GLFW application, that is out of scope for this guide. For our purposes GLFW (the library) and a single window are a monolithic unit - initialized and destroyed together. This can be encapsulated in a std::unique_ptr with a custom deleter, especially since GLFW returns an opaque pointer (GLFWwindow*).

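```cpp
// window.hpp
#pragma once
#include <GLFW/glfw3.h>
#include <glm/vec2.hpp>
#include <memory>

namespace lvk::glfw {
struct Deleter {
	void operator()(GLFWwindow* window) const noexcept;
};

using Window = std::unique_ptr<GLFWwindow, Deleter>;

// Returns a valid Window if successful, else throws.
[[nodiscard]] auto create_window(glm::ivec2 size, char const* title) -> Window;
} // namespace lvk::glfw
```

```cpp
// window.cpp
#include "window.hpp"

namespace lvk {
void glfw::Deleter::operator()(GLFWwindow* window) const noexcept {
	// the window and the library are a single unit: destroy both.
	glfwDestroyWindow(window);
	glfwTerminate();
}
} // namespace lvk
```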
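The same pattern can be tried without GLFW. This standalone sketch uses a hypothetical C-style API (fake_create / fake_destroy stand in for glfwCreateWindow / glfwDestroyWindow) and counts destroy calls. Note that std::unique_ptr never invokes its deleter for a null pointer, so a failed creation does not reach the deleter at all:

```cpp
#include <cassert>
#include <memory>

// hypothetical handle type standing in for GLFWwindow.
struct FakeWindow {
	int id{};
};

// counters so the behaviour can be observed (illustration only).
inline int g_live_windows{0};
inline int g_destroy_calls{0};

// hypothetical C-style creation function: returns nullptr on failure.
inline auto fake_create(bool const succeed) -> FakeWindow* {
	if (!succeed) { return nullptr; }
	++g_live_windows;
	return new FakeWindow{};
}

// hypothetical C-style destruction function.
inline void fake_destroy(FakeWindow* window) {
	++g_destroy_calls;
	--g_live_windows;
	delete window;
}

// custom deleter, analogous to glfw::Deleter.
struct FakeDeleter {
	void operator()(FakeWindow* window) const noexcept { fake_destroy(window); }
};

using FakeWindowPtr = std::unique_ptr<FakeWindow, FakeDeleter>;
```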

GLFW can create fullscreen and borderless windows, but we will stick to a standard window with decorations. Since we cannot do anything useful if we are unable to create a window, all other branches throw a fatal exception (caught in main).

```cpp
auto glfw::create_window(glm::ivec2 const size, char const* title) -> Window {
	static auto const on_error = [](int const code, char const* description) {
		std::println(stderr, "[GLFW] Error {}: {}", code, description);
	};
	glfwSetErrorCallback(on_error);
	if (glfwInit() != GLFW_TRUE) {
		throw std::runtime_error{"Failed to initialize GLFW"};
	}
	// check for Vulkan support.
	if (glfwVulkanSupported() != GLFW_TRUE) {
		throw std::runtime_error{"Vulkan not supported"};
	}
	auto ret = Window{};
	// tell GLFW that we don't want an OpenGL context.
	glfwWindowHint(GLFW_CLIENT_API, GLFW_NO_API);
	ret.reset(glfwCreateWindow(size.x, size.y, title, nullptr, nullptr));
	if (!ret) { throw std::runtime_error{"Failed to create GLFW Window"}; }
	return ret;
}
```

App can now store a glfw::Window and keep polling it in run() until it gets closed by the user. We will not be able to draw anything to the window for a while, but this is the first step in that journey.

Declare it as a private member:

```cpp
  private:
	glfw::Window m_window{};
```

Add some private member functions to encapsulate each operation:

```cpp
	void create_window();
	void main_loop();
```

Implement them and call them in run():

```cpp
void App::run() {
	create_window();
	main_loop();
}

void App::create_window() {
	m_window = glfw::create_window({1280, 720}, "Learn Vulkan");
}

void App::main_loop() {
	while (glfwWindowShouldClose(m_window.get()) == GLFW_FALSE) {
		glfwPollEvents();
	}
}
```

On Wayland you will not even see a window yet: it is only shown after the application presents a framebuffer to it.

Vulkan Instance

Instead of linking to Vulkan (via the SDK) at build-time, we will load Vulkan at runtime. This requires a few adjustments:

- In the CMake ext target, VK_NO_PROTOTYPES is defined, which removes the API function prototypes so that Vulkan functions are called through function pointers loaded at runtime instead

- In app.cpp, this line is added to the global scope: VULKAN_HPP_DEFAULT_DISPATCH_LOADER_DYNAMIC_STORAGE

- Before and during initialization, VULKAN_HPP_DEFAULT_DISPATCHER.init() is called

The first thing to do in Vulkan is to create an Instance, which will enable enumeration of physical devices (GPUs) and creation of a logical device.

Since we require Vulkan 1.3, store that in a constant to be easily referenced:

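```cpp
namespace {
// VK_MAKE_API_VERSION supersedes the deprecated VK_MAKE_VERSION (same value here).
constexpr auto vk_version_v = VK_MAKE_API_VERSION(0, 1, 3, 0);
} // namespace
```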

In App, create a new member function create_instance() and call it after create_window() in run(). After initializing the dispatcher, check that the loader meets the version requirement:

```cpp
void App::create_instance() {
	// initialize the dispatcher without any arguments.
	VULKAN_HPP_DEFAULT_DISPATCHER.init();
	auto const loader_version = vk::enumerateInstanceVersion();
	if (loader_version < vk_version_v) {
		throw std::runtime_error{"Loader does not support Vulkan 1.3"};
	}
}
```
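The version check is a plain integer comparison because Vulkan packs a version into a single std::uint32_t, with the major version in the highest bits. Per the Vulkan specification's VK_MAKE_API_VERSION layout, the variant occupies bits 29-31, major bits 22-28, minor bits 12-21 and patch bits 0-11. A standalone re-implementation (make_api_version and friends are hypothetical helpers, not the Vulkan macros) shows why comparing packed values works:

```cpp
#include <cassert>
#include <cstdint>

// hypothetical re-implementation of the VK_MAKE_API_VERSION bit layout.
constexpr auto make_api_version(std::uint32_t const variant,
                                std::uint32_t const major,
                                std::uint32_t const minor,
                                std::uint32_t const patch) -> std::uint32_t {
	return (variant << 29U) | (major << 22U) | (minor << 12U) | patch;
}

// extract the major / minor components back out of a packed version.
constexpr auto api_version_major(std::uint32_t const version) -> std::uint32_t {
	return (version >> 22U) & 0x7fU;
}

constexpr auto api_version_minor(std::uint32_t const version) -> std::uint32_t {
	return (version >> 12U) & 0x3ffU;
}

constexpr auto required_v = make_api_version(0, 1, 3, 0);

// packed values order by (major, minor, patch), so newer versions compare greater.
static_assert(make_api_version(0, 1, 3, 275) > required_v);
static_assert(make_api_version(0, 1, 4, 0) > required_v);
static_assert(make_api_version(0, 1, 2, 4095) < required_v);
```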

We will need the WSI instance extensions, which GLFW conveniently provides for us. Add a helper function in window.hpp/cpp:

```cpp
auto glfw::instance_extensions() -> std::span<char const* const> {
	auto count = std::uint32_t{};
	auto const* extensions = glfwGetRequiredInstanceExtensions(&count);
	return {extensions, static_cast<std::size_t>(count)};
}
```

Continuing with instance creation, create a vk::ApplicationInfo object and fill it up:

```cpp
auto app_info = vk::ApplicationInfo{};
app_info.setPApplicationName("Learn Vulkan").setApiVersion(vk_version_v);
```

Create a vk::InstanceCreateInfo object and fill it up:

```cpp
auto instance_ci = vk::InstanceCreateInfo{};
// need WSI instance extensions here (platform-specific Swapchains).
auto const extensions = glfw::instance_extensions();
instance_ci.setPApplicationInfo(&app_info).setPEnabledExtensionNames(
	extensions);
```
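The chained setPApplicationName(...).setApiVersion(...) calls work because VulkanHPP's structs provide setters that assign one member and return a reference to the struct itself. A minimal standalone sketch of that fluent-setter pattern (AppInfoSketch is hypothetical, not the real vk::ApplicationInfo):

```cpp
#include <cassert>
#include <cstdint>
#include <string_view>

// hypothetical struct mimicking the VulkanHPP setter style.
struct AppInfoSketch {
	char const* p_application_name{};
	std::uint32_t api_version{};

	// each setter mutates one member and returns *this, enabling chaining.
	constexpr auto set_p_application_name(char const* value) -> AppInfoSketch& {
		p_application_name = value;
		return *this;
	}

	constexpr auto set_api_version(std::uint32_t const value) -> AppInfoSketch& {
		api_version = value;
		return *this;
	}
};
```

Since these structs only store pointers (here to the name; in instance_ci to app_info and the extension list), everything they point to must stay alive until the create call consumes them, which is why app_info and extensions are named locals.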

Add a vk::UniqueInstance member after m_window: this must be destroyed before terminating GLFW. Create it, and initialize the dispatcher against it:

```cpp
glfw::Window m_window{};
vk::UniqueInstance m_instance{};

// ...

m_instance = vk::createInstanceUnique(instance_ci);
// initialize the dispatcher against the created Instance.
VULKAN_HPP_DEFAULT_DISPATCHER.init(*m_instance);
```

Make sure VkConfig is running with validation layers enabled, and debug/run the app. If "Information" level loader messages are enabled, you should see quite a bit of console output at this point: information about layers being loaded, physical devices and their ICDs being enumerated, etc.

If this line or equivalent is not visible in the logs, re-check your Vulkan Configurator setup and PATH:

```
INFO | LAYER: Insert instance layer "VK_LAYER_KHRONOS_validation"
```

For instance, if libVkLayer_khronos_validation.so / VkLayer_khronos_validation.dll is not visible to the app / loader, you'll see a line similar to:

```
INFO | LAYER: Requested layer "VK_LAYER_KHRONOS_validation" failed to load.
```

Congratulations, you have successfully initialized a Vulkan Instance!

Wayland users: seeing the window is still a long way off; these VkConfig/validation logs are your only feedback for now.

Vulkan Surface

Being platform agnostic, Vulkan interfaces with the WSI via the VK_KHR_surface extension. A Surface enables displaying images on the window through the presentation engine.

Add another helper function in window.hpp/cpp:

```cpp
auto glfw::create_surface(GLFWwindow* window, vk::Instance const instance)
	-> vk::UniqueSurfaceKHR {
	VkSurfaceKHR ret{};
	auto const result =
		glfwCreateWindowSurface(instance, window, nullptr, &ret);
	if (result != VK_SUCCESS || ret == VkSurfaceKHR{}) {
		throw std::runtime_error{"Failed to create Vulkan Surface"};
	}
	return vk::UniqueSurfaceKHR{ret, instance};
}
```

Add a vk::UniqueSurfaceKHR member to App after m_instance, and create the surface:

```cpp
void App::create_surface() {
	m_surface = glfw::create_surface(m_window.get(), *m_instance);
}
```

Vulkan Physical Device

A Physical Device represents a single complete implementation of Vulkan, for our intents and purposes a single GPU. (It could also be eg a software renderer like Mesa/lavapipe.) Some machines may have multiple Physical Devices available, like laptops with dual-GPUs. We need to select the one we want to use, given our constraints:

- Vulkan 1.3 must be supported

- Vulkan Swapchains must be supported

- A Vulkan Queue that supports Graphics and Transfer operations must be available

- It must be able to present to the previously created Vulkan Surface

- (Optional) Prefer discrete GPUs

We wrap the actual Physical Device and a few other useful objects into struct Gpu. Since it will be accompanied by a hefty utility function, we put it in its own hpp/cpp files, and move the vk_version_v constant to this new header:

```cpp
#include <cstdint>
#include <vulkan/vulkan.hpp>

// VK_MAKE_API_VERSION supersedes the deprecated VK_MAKE_VERSION (same value here).
constexpr auto vk_version_v = VK_MAKE_API_VERSION(0, 1, 3, 0);

struct Gpu {
	vk::PhysicalDevice device{};
	vk::PhysicalDeviceProperties properties{};
	vk::PhysicalDeviceFeatures features{};
	std::uint32_t queue_family{};
};

[[nodiscard]] auto get_suitable_gpu(vk::Instance instance,
									vk::SurfaceKHR surface) -> Gpu;
```

The implementation:

```cpp
auto lvk::get_suitable_gpu(vk::Instance const instance,
						   vk::SurfaceKHR const surface) -> Gpu {
	auto const supports_swapchain = [](Gpu const& gpu) {
		static constexpr std::string_view name_v =
			VK_KHR_SWAPCHAIN_EXTENSION_NAME;
		static constexpr auto is_swapchain =
			[](vk::ExtensionProperties const& properties) {
				return properties.extensionName.data() == name_v;
			};
		auto const properties = gpu.device.enumerateDeviceExtensionProperties();
		auto const it = std::ranges::find_if(properties, is_swapchain);
		return it != properties.end();
	};

	auto const set_queue_family = [](Gpu& out_gpu) {
		static constexpr auto queue_flags_v =
			vk::QueueFlagBits::eGraphics | vk::QueueFlagBits::eTransfer;
		for (auto const [index, family] :
			 std::views::enumerate(out_gpu.device.getQueueFamilyProperties())) {
			if ((family.queueFlags & queue_flags_v) == queue_flags_v) {
				out_gpu.queue_family = static_cast<std::uint32_t>(index);
				return true;
			}
		}
		return false;
	};

	auto const can_present = [surface](Gpu const& gpu) {
		return gpu.device.getSurfaceSupportKHR(gpu.queue_family, surface) ==
			   vk::True;
	};

	auto fallback = Gpu{};
	for (auto const& device : instance.enumeratePhysicalDevices()) {
		auto gpu = Gpu{.device = device, .properties = device.getProperties()};
		if (gpu.properties.apiVersion < vk_version_v) { continue; }
		if (!supports_swapchain(gpu)) { continue; }
		if (!set_queue_family(gpu)) { continue; }
		if (!can_present(gpu)) { continue; }
		gpu.features = gpu.device.getFeatures();
		if (gpu.properties.deviceType == vk::PhysicalDeviceType::eDiscreteGpu) {
			return gpu;
		}
		// keep iterating in case we find a Discrete Gpu later.
		fallback = gpu;
	}
	if (fallback.device) { return fallback; }

	throw std::runtime_error{"No suitable Vulkan Physical Devices"};
}
```

Finally, add a Gpu member in App and initialize it after create_surface():

```cpp
create_surface();
select_gpu();

// ...

void App::select_gpu() {
	m_gpu = get_suitable_gpu(*m_instance, *m_surface);
	std::println("Using GPU: {}",
				 std::string_view{m_gpu.properties.deviceName});
}
```

Vulkan Device

A Vulkan Device is a logical instance of a Physical Device, and will be the primary interface for everything Vulkan from now on. Vulkan Queues are owned by the Device; we will need one from the queue family stored in the Gpu to submit recorded command buffers. We also need to explicitly declare all features we want to use, eg Dynamic Rendering and Synchronization2.

Set up a vk::DeviceQueueCreateInfo object:

```cpp
auto queue_ci = vk::DeviceQueueCreateInfo{};
// since we use only one queue, it has the entire priority range, ie, 1.0
static constexpr auto queue_priorities_v = std::array{1.0f};
queue_ci.setQueueFamilyIndex(m_gpu.queue_family)
	.setQueueCount(1)
	.setQueuePriorities(queue_priorities_v);
```

Set up the core device features:

```cpp
// nice-to-have optional core features, enable if GPU supports them.
auto enabled_features = vk::PhysicalDeviceFeatures{};
enabled_features.fillModeNonSolid = m_gpu.features.fillModeNonSolid;
enabled_features.wideLines = m_gpu.features.wideLines;
enabled_features.samplerAnisotropy = m_gpu.features.samplerAnisotropy;
enabled_features.sampleRateShading = m_gpu.features.sampleRateShading;
```

Set up the additional features, using setPNext() to chain them:

```cpp
// extra features that need to be explicitly enabled.
auto sync_feature = vk::PhysicalDeviceSynchronization2Features{vk::True};
auto dynamic_rendering_feature =
	vk::PhysicalDeviceDynamicRenderingFeatures{vk::True};
// sync_feature.pNext => dynamic_rendering_feature,
// and later device_ci.pNext => sync_feature.
// this is 'pNext chaining'.
sync_feature.setPNext(&dynamic_rendering_feature);
```
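Conceptually, a pNext chain is a singly linked list of structs, each starting with a type tag (sType) and a next pointer, like VkBaseInStructure; the implementation walks the chain and interprets each node according to its tag. A standalone sketch of such a traversal, where StructType, BaseHeader, the feature structs and chain_types are all hypothetical simplifications:

```cpp
#include <cassert>
#include <vector>

// hypothetical type tags, mirroring vk::StructureType values.
enum class StructType { eSync2Features, eDynamicRenderingFeatures };

// every chainable struct begins with this header (like VkBaseInStructure).
struct BaseHeader {
	StructType s_type;
	void const* p_next{};
};

struct Sync2Features {
	BaseHeader header{StructType::eSync2Features};
	bool synchronization2{};
};

struct DynamicRenderingFeatures {
	BaseHeader header{StructType::eDynamicRenderingFeatures};
	bool dynamic_rendering{};
};

// walks a chain and collects the tag of each node, as a driver might when
// looking for structs it recognizes.
inline auto chain_types(void const* p_next) -> std::vector<StructType> {
	auto ret = std::vector<StructType>{};
	while (p_next != nullptr) {
		// valid here because each node is standard-layout and its first
		// member is a BaseHeader (pointer-interconvertible).
		auto const* header = static_cast<BaseHeader const*>(p_next);
		ret.push_back(header->s_type);
		p_next = header->p_next;
	}
	return ret;
}
```

Because the chain only stores pointers, every struct in it (sync_feature, dynamic_rendering_feature) must outlive the createDevice call.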

Set up a vk::DeviceCreateInfo object:

```cpp
auto device_ci = vk::DeviceCreateInfo{};
// we only need one device extension: Swapchain.
static constexpr auto extensions_v =
	std::array{VK_KHR_SWAPCHAIN_EXTENSION_NAME};
device_ci.setPEnabledExtensionNames(extensions_v)
	.setQueueCreateInfos(queue_ci)
	.setPEnabledFeatures(&enabled_features)
	.setPNext(&sync_feature);
```

Declare a vk::UniqueDevice member after m_gpu, create it, and initialize the dispatcher against it:

```cpp
m_device = m_gpu.device.createDeviceUnique(device_ci);
// initialize the dispatcher against the created Device.
VULKAN_HPP_DEFAULT_DISPATCHER.init(*m_device);
```

Declare a vk::Queue member (order doesn't matter since it's just a handle, the actual Queue is owned by the Device) and initialize it:

```cpp
static constexpr std::uint32_t queue_index_v{0};
m_queue = m_device->getQueue(m_gpu.queue_family, queue_index_v);
```

Scoped Waiter

A useful abstraction to have is an object that waits/blocks in its destructor until the Device is idle. Destroying Vulkan objects while they are still in use by the GPU is incorrect usage, and such an object helps make sure the device is idle before some dependent resource gets destroyed.

Being able to do arbitrary things on scope exit will be useful in other spots too, so we encapsulate that in a basic class template Scoped. It's somewhat like a unique_ptr<Type, Deleter> that stores the value (Type) instead of a pointer (Type*), with some constraints:

- Type must be default constructible and equality comparable

- Assumes a default constructed Type is equivalent to null (does not call Deleter)

```cpp
#include <concepts>
#include <type_traits>
#include <utility>

template <typename Type>
concept Scopeable =
	std::equality_comparable<Type> && std::is_default_constructible_v<Type>;

template <Scopeable Type, typename Deleter>
class Scoped {
  public:
	Scoped(Scoped const&) = delete;
	auto operator=(Scoped const&) -> Scoped& = delete;

	Scoped() = default;

	constexpr Scoped(Scoped&& rhs) noexcept
		: m_t(std::exchange(rhs.m_t, Type{})) {}

	constexpr auto operator=(Scoped&& rhs) noexcept -> Scoped& {
		// rhs now holds our old value and will delete it when it dies.
		if (&rhs != this) { std::swap(m_t, rhs.m_t); }
		return *this;
	}

	explicit(false) constexpr Scoped(Type t) : m_t(std::move(t)) {}

	constexpr ~Scoped() {
		// a default constructed Type is treated as null: nothing to delete.
		if (m_t == Type{}) { return; }
		Deleter{}(m_t);
	}

	[[nodiscard]] constexpr auto get() const -> Type const& { return m_t; }
	[[nodiscard]] constexpr auto get() -> Type& { return m_t; }

  private:
	Type m_t{};
};
```

Don't worry if this doesn't make a lot of sense: the implementation isn't important, what it does and how to use it is what matters.

A ScopedWaiter can now be implemented quite easily:

```cpp
struct ScopedWaiterDeleter {
	void operator()(vk::Device const device) const noexcept {
		device.waitIdle();
	}
};

using ScopedWaiter = Scoped<vk::Device, ScopedWaiterDeleter>;
```

Add a ScopedWaiter member to App at the end of its member list: this must remain at the end to be the first member that gets destroyed, thus guaranteeing the device will be idle before the destruction of any other members begins. Initialize it after creating the Device:

```cpp
m_waiter = *m_device;
```

Swapchain

A Vulkan Swapchain is an array of presentable images associated with a Surface, which acts as a bridge between the application and the platform's presentation engine (compositor / display engine). The Swapchain will be continually used in the main loop to acquire and present images. Since failing to create a Swapchain is a fatal error, its creation is part of the initialization section.

We shall wrap the Vulkan Swapchain into our own class Swapchain. It will also store a copy of the Images owned by the Vulkan Swapchain, and create (and own) Image Views for each Image. The Vulkan Swapchain may need to be recreated in the main loop, eg when the framebuffer size changes, or when an acquire/present operation returns vk::ErrorOutOfDateKHR. This will be encapsulated in a recreate() function which can simply be called during initialization as well.

```cpp
// swapchain.hpp
class Swapchain {
  public:
	explicit Swapchain(vk::Device device, Gpu const& gpu,
					   vk::SurfaceKHR surface, glm::ivec2 size);

	auto recreate(glm::ivec2 size) -> bool;

	[[nodiscard]] auto get_size() const -> glm::ivec2 {
		return {m_ci.imageExtent.width, m_ci.imageExtent.height};
	}

  private:
	void populate_images();
	void create_image_views();

	vk::Device m_device{};
	Gpu m_gpu{};

	vk::SwapchainCreateInfoKHR m_ci{};
	vk::UniqueSwapchainKHR m_swapchain{};
	std::vector<vk::Image> m_images{};
	std::vector<vk::UniqueImageView> m_image_views{};
};
```

```cpp
// swapchain.cpp
Swapchain::Swapchain(vk::Device const device, Gpu const& gpu,
					 vk::SurfaceKHR const surface, glm::ivec2 const size)
	: m_device(device), m_gpu(gpu) {}
```

Static Swapchain Properties

Some Swapchain creation parameters like the image extent (size) and count depend on the surface capabilities, which can change during runtime. We can set up the rest in the constructor, for which we need a helper function to obtain a desired Surface Format:

```cpp
constexpr auto srgb_formats_v = std::array{
	vk::Format::eR8G8B8A8Srgb,
	vk::Format::eB8G8R8A8Srgb,
};

// returns a SurfaceFormat with SrgbNonLinear color space and an sRGB format.
[[nodiscard]] constexpr auto
get_surface_format(std::span<vk::SurfaceFormatKHR const> supported)
	-> vk::SurfaceFormatKHR {
	for (auto const desired : srgb_formats_v) {
		auto const is_match = [desired](vk::SurfaceFormatKHR const& in) {
			// eSrgbNonlinear is the current name of the deprecated
			// eVkColorspaceSrgbNonlinear alias.
			return in.format == desired &&
				   in.colorSpace == vk::ColorSpaceKHR::eSrgbNonlinear;
		};
		auto const it = std::ranges::find_if(supported, is_match);
		if (it == supported.end()) { continue; }
		return *it;
	}
	// fallback: the first supported format (the spec guarantees at least one).
	return supported.front();
}
```

An sRGB format is preferred because that is the color space displays conventionally expect. This is reflected by the fact that the only Color Space defined by VK_KHR_surface itself is vk::ColorSpaceKHR::eSrgbNonlinear (also exposed as the older alias eVkColorspaceSrgbNonlinear), which specifies support for images in the sRGB color space.

The constructor can now be implemented:

```cpp
auto const surface_format =
	get_surface_format(m_gpu.device.getSurfaceFormatsKHR(surface));
m_ci.setSurface(surface)
	.setImageFormat(surface_format.format)
	.setImageColorSpace(surface_format.colorSpace)
	.setImageArrayLayers(1)
	// Swapchain images will be used as color attachments (render targets).
	.setImageUsage(vk::ImageUsageFlagBits::eColorAttachment)
	// eFifo is guaranteed to be supported.
	.setPresentMode(vk::PresentModeKHR::eFifo);
if (!recreate(size)) {
	throw std::runtime_error{"Failed to create Vulkan Swapchain"};
}
```

Swapchain Recreation

The constraints on Swapchain creation parameters are specified by Surface Capabilities. Based on the spec we add two helper functions and a constant:

```cpp
constexpr std::uint32_t min_images_v{3};

// returns currentExtent if specified, else clamped size.
[[nodiscard]] constexpr auto
get_image_extent(vk::SurfaceCapabilitiesKHR const& capabilities,
				 glm::uvec2 const size) -> vk::Extent2D {
	constexpr auto limitless_v = 0xffffffff;
	if (capabilities.currentExtent.width < limitless_v &&
		capabilities.currentExtent.height < limitless_v) {
		return capabilities.currentExtent;
	}
	auto const x = std::clamp(size.x, capabilities.minImageExtent.width,
							  capabilities.maxImageExtent.width);
	auto const y = std::clamp(size.y, capabilities.minImageExtent.height,
							  capabilities.maxImageExtent.height);
	return vk::Extent2D{x, y};
}

[[nodiscard]] constexpr auto
get_image_count(vk::SurfaceCapabilitiesKHR const& capabilities)
	-> std::uint32_t {
	if (capabilities.maxImageCount < capabilities.minImageCount) {
		return std::max(min_images_v, capabilities.minImageCount);
	}
	return std::clamp(min_images_v, capabilities.minImageCount,
					  capabilities.maxImageCount);
}
```

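We want at least three images in order to have the option of setting up triple buffering. While it's possible for a Surface to have maxImageCount < 3, it is quite unlikely. It is in fact much more likely for minImageCount > 3. (A maxImageCount of 0 means there is no upper limit, which is why get_image_count() first checks for maxImageCount < minImageCount.)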
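Both helpers are pure functions of the capabilities, so their edge cases can be checked in isolation. This standalone sketch mirrors them over hypothetical plain structs (Extent, Capabilities) instead of vk::SurfaceCapabilitiesKHR, covering a surface with a fixed currentExtent, a surface reporting the special 0xFFFFFFFF extent (the application picks the size, clamped to the limits), and maxImageCount == 0 (no upper limit):

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// hypothetical plain mirror of the relevant vk::SurfaceCapabilitiesKHR members.
struct Extent {
	std::uint32_t width{};
	std::uint32_t height{};
};

struct Capabilities {
	Extent current_extent{};
	Extent min_extent{};
	Extent max_extent{};
	std::uint32_t min_image_count{};
	std::uint32_t max_image_count{}; // 0 means "no upper limit"
};

constexpr std::uint32_t min_images_v{3};

// currentExtent of 0xFFFFFFFF x 0xFFFFFFFF means the application picks the size.
constexpr auto image_extent(Capabilities const& caps, Extent const size) -> Extent {
	constexpr auto special_v = 0xffffffffU;
	if (caps.current_extent.width != special_v &&
		caps.current_extent.height != special_v) {
		return caps.current_extent;
	}
	return Extent{
		std::clamp(size.width, caps.min_extent.width, caps.max_extent.width),
		std::clamp(size.height, caps.min_extent.height, caps.max_extent.height),
	};
}

constexpr auto image_count(Capabilities const& caps) -> std::uint32_t {
	// a max of 0 (less than min) means unlimited: only the lower bound applies.
	if (caps.max_image_count < caps.min_image_count) {
		return std::max(min_images_v, caps.min_image_count);
	}
	return std::clamp(min_images_v, caps.min_image_count, caps.max_image_count);
}
```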

The dimensions of Vulkan Images must be positive, so if the incoming framebuffer size is not, we skip the attempt to recreate. This can happen eg on Windows when the window is minimized. (Until it is restored, rendering will basically be paused.)

```cpp
auto Swapchain::recreate(glm::ivec2 size) -> bool {
	// Image sizes must be positive.
	if (size.x <= 0 || size.y <= 0) { return false; }

	auto const capabilities =
		m_gpu.device.getSurfaceCapabilitiesKHR(m_ci.surface);
	m_ci.setImageExtent(get_image_extent(capabilities, size))
		.setMinImageCount(get_image_count(capabilities))
		.setOldSwapchain(m_swapchain ? *m_swapchain : vk::SwapchainKHR{})
		.setQueueFamilyIndices(m_gpu.queue_family);
	assert(m_ci.imageExtent.width > 0 && m_ci.imageExtent.height > 0 &&
		   m_ci.minImageCount >= min_images_v);

	// wait for the device to be idle before destroying the current swapchain.
	m_device.waitIdle();
	m_swapchain = m_device.createSwapchainKHRUnique(m_ci);

	return true;
}
```

After successful recreation we want to fill up those vectors of images and views. For the images we use a more verbose approach to avoid having to assign the member vector to a newly returned one every time:

```cpp
void require_success(vk::Result const result, char const* error_msg) {
	if (result != vk::Result::eSuccess) { throw std::runtime_error{error_msg}; }
}

// ...

void Swapchain::populate_images() {
	// we use the more verbose two-call API to avoid assigning m_images to a new
	// vector on every call.
	auto image_count = std::uint32_t{};
	auto result =
		m_device.getSwapchainImagesKHR(*m_swapchain, &image_count, nullptr);
	require_success(result, "Failed to get Swapchain Images");

	m_images.resize(image_count);
	result = m_device.getSwapchainImagesKHR(*m_swapchain, &image_count,
											m_images.data());
	require_success(result, "Failed to get Swapchain Images");
}
```
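The two-call pattern follows a convention shared by many Vulkan enumeration functions: with a null output pointer the count is written; otherwise up to *count items are written, *count is updated to the number written, and an incomplete result is returned if not everything fit. A standalone sketch of that protocol, where get_items, fill_items, Result and g_source are hypothetical stand-ins for the Vulkan call and its data:

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

enum class Result { eSuccess, eIncomplete };

// hypothetical data source standing in for the Swapchain's images.
inline std::vector<int> g_source{10, 20, 30};

// hypothetical C-style getter following the two-call convention.
inline auto get_items(std::uint32_t* count, int* out) -> Result {
	auto const total = static_cast<std::uint32_t>(g_source.size());
	if (out == nullptr) {
		*count = total;
		return Result::eSuccess;
	}
	auto const written = std::min(*count, total);
	std::copy_n(g_source.begin(), written, out);
	*count = written;
	return written < total ? Result::eIncomplete : Result::eSuccess;
}

// refills an existing vector in place: resize() can reuse its capacity
// instead of allocating a brand new vector on every call.
inline auto fill_items(std::vector<int>& out) -> Result {
	auto count = std::uint32_t{};
	auto result = get_items(&count, nullptr);
	if (result != Result::eSuccess) { return result; }
	out.resize(count);
	result = get_items(&count, out.data());
	// trim to the number of items actually written.
	out.resize(count);
	return result;
}
```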

Creation of the views is fairly straightforward:

```cpp
void Swapchain::create_image_views() {
	auto subresource_range = vk::ImageSubresourceRange{};
	// this is a color image with 1 layer and 1 mip-level (the default).
	subresource_range.setAspectMask(vk::ImageAspectFlagBits::eColor)
		.setLayerCount(1)
		.setLevelCount(1);
	auto image_view_ci = vk::ImageViewCreateInfo{};
	// set common parameters here (everything except the Image).
	image_view_ci.setViewType(vk::ImageViewType::e2D)
		.setFormat(m_ci.imageFormat)
		.setSubresourceRange(subresource_range);
	m_image_views.clear();
	m_image_views.reserve(m_images.size());
	for (auto const image : m_images) {
		image_view_ci.setImage(image);
		m_image_views.push_back(m_device.createImageViewUnique(image_view_ci));
	}
}
```

We can now call these functions in recreate(), before return true, and add a log for some feedback:

```cpp
populate_images();
create_image_views();

size = get_size();
std::println("[lvk] Swapchain [{}x{}]", size.x, size.y);
return true;
```

The log can get a bit noisy on incessant resizing (especially on Linux).

To get the framebuffer size, add a helper function in window.hpp/cpp:

```cpp
auto glfw::framebuffer_size(GLFWwindow* window) -> glm::ivec2 {
	auto ret = glm::ivec2{};
	glfwGetFramebufferSize(window, &ret.x, &ret.y);
	return ret;
}
```

Finally, add a std::optional<Swapchain> member to App after m_device, add the create function, and call it after create_device():

```cpp
std::optional<Swapchain> m_swapchain{};

// ...

void App::create_swapchain() {
	auto const size = glfw::framebuffer_size(m_window.get());
	m_swapchain.emplace(*m_device, m_gpu, *m_surface, size);
}
```

Rendering

This section implements Render Sync and the Swapchain loop, performs Swapchain image layout transitions, and introduces Dynamic Rendering. Originally Vulkan only supported Render Passes, which are quite verbose to set up, require somewhat confusing subpass dependencies, and are ironically less explicit: they can perform implicit layout transitions on their framebuffer attachments. They are also tightly coupled to Graphics Pipelines: each pipeline is created against a specific Render Pass and can only be used with compatible ones, so otherwise identical pipelines may need to be duplicated. This RenderPass/Subpass model was primarily beneficial for GPUs with tiled renderers, and in Vulkan 1.3 Dynamic Rendering was promoted to the core API (previously it was an extension) as an alternative to using Render Passes.

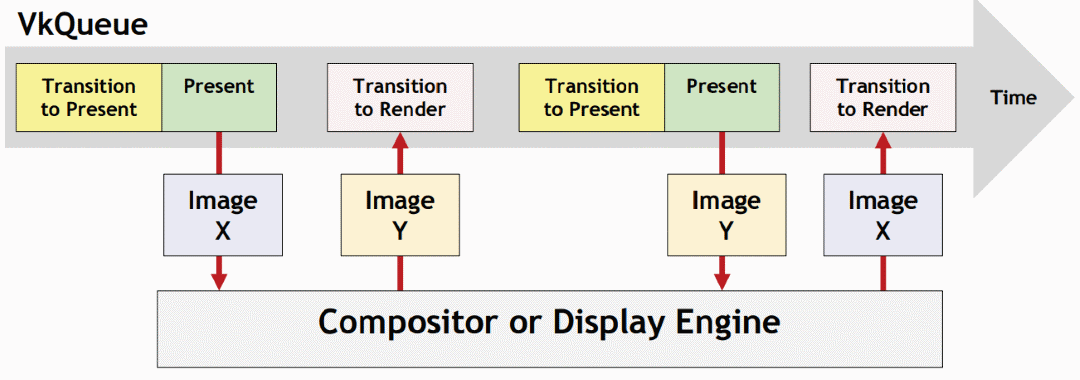

Swapchain Loop

One part of rendering in the main loop is the Swapchain loop, which at a high level consists of these steps:

- Acquire a Swapchain Image

- Render to the acquired Image

- Present the Image (this releases the image back to the Swapchain)

There are a few nuances to deal with, for instance:

- Acquiring (and/or presenting) will sometimes fail (eg because the Swapchain is out of date), in which case the remaining steps need to be skipped

- The acquire command can return before the image is actually ready for use, so rendering needs to be synchronized to only start after the image is ready

- Similarly, presentation needs to be synchronized to only occur after rendering has completed

- The images need appropriate Layout Transitions at each stage
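The control flow of these steps can be sketched without any Vulkan calls. In the minimal, hypothetical mock below, `MockSwapchain` scripts the acquire/present results up front and `run_frame()` skips the remaining steps (and recreates) when acquisition fails. Fences, semaphores and layout transitions are deliberately not modeled; this only illustrates the skip/recreate logic.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

// Hypothetical stand-in for the Swapchain: acquire/present results are
// scripted up front so the control flow can be exercised without a GPU.
struct MockSwapchain {
  std::vector<bool> acquire_ok{}; // consumed in order, one per acquire.
  std::vector<bool> present_ok{}; // consumed in order, one per present.
  std::size_t next_acquire{};
  std::size_t next_present{};
  std::uint32_t image_count{3};
  std::uint32_t next_image{};
  int recreate_count{};

  // returns the acquired image index, or nullopt (eg out of date).
  auto acquire() -> std::optional<std::uint32_t> {
    assert(image_count > 0);
    if (!acquire_ok.at(next_acquire++)) { return std::nullopt; }
    auto const index = next_image;
    next_image = (next_image + 1) % image_count;
    return index;
  }

  // returns false if the Swapchain needs to be recreated.
  auto present() -> bool { return present_ok.at(next_present++); }

  void recreate() { ++recreate_count; }
};

struct FrameStats {
  int rendered{};
  int skipped{};
};

// One iteration of the Swapchain loop: acquire => render => present.
void run_frame(MockSwapchain& swapchain, FrameStats& stats) {
  auto const image_index = swapchain.acquire();
  if (!image_index) {
    // acquire failed: recreate and skip the remaining steps this frame.
    swapchain.recreate();
    ++stats.skipped;
    return;
  }
  // real code: wait on sync, record commands targeting *image_index, submit.
  ++stats.rendered;
  // presenting releases the image back to the Swapchain.
  if (!swapchain.present()) { swapchain.recreate(); }
}
```

Note that a failed present does not skip the frame (the image was already rendered), it only triggers a recreation before the next acquire.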

Additionally, the number of swapchain images can vary, whereas the engine should use a fixed number of virtual frames: 2 for double buffering, 3 for triple (more is usually overkill). More info is available here. It's also possible for the main loop to acquire the same image before a previous render command has finished (or even started), if the Swapchain is using Mailbox Present Mode. While FIFO will block until the oldest submitted image is available (also known as vsync), we should still synchronize and wait until the acquired image has finished rendering.

Virtual Frames

All the dynamic resources used during the rendering of a frame comprise a virtual frame. The application has a fixed number of virtual frames and moves on to the next one every frame, wrapping around at the end. For synchronization, each virtual frame will be associated with a vk::Fence which will be waited on before rendering to it again. It will also have a vk::Semaphore to synchronize the acquire and render calls on the GPU (we don't need to wait for them in the code). For recording commands, there will be a vk::CommandBuffer per virtual frame, where all rendering commands for that frame (including layout transitions) will be recorded.

Presentation will require a semaphore for synchronization too, but present semaphores cannot be part of the virtual frame. The swapchain loop only waits on the drawn fence (pre-signaled for each virtual frame on first use), which tracks completion of the render submission, not of the present operation that waits on the present semaphore. A later frame could therefore acquire another image and submit commands that signal a present semaphore which presentation has not yet waited on: this is invalid. Thus, these semaphores will be tied to the swapchain images (associated with their indices), and recreated along with the Swapchain.

Image Layouts

Vulkan Images have a property known as Image Layout. Most operations on images and their subresources require them to be in specific layouts, so transitions are needed before (and after) such operations. A layout transition conveniently also functions as a Pipeline Barrier (think memory barrier on the GPU), enabling us to synchronize operations before and after the transition.

Vulkan Synchronization is arguably the most complicated aspect of the API, so a good amount of research is recommended. Here is an article explaining barriers.

Render Sync

Create a new header resource_buffering.hpp:

#pragma once

#include <array>

#include <cstddef>

// Number of virtual frames.

inline constexpr std::size_t resource_buffering_v{2};

// Alias for N-buffered resources.

template <typename Type>

using Buffered = std::array<Type, resource_buffering_v>;
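To illustrate how a `Buffered<T>` array is cycled, here is a standalone sketch that mirrors the `resource_buffering_v` constant and `Buffered` alias, with a hypothetical `FrameData` standing in for the `RenderSync` struct added below. The frame index always wraps modulo the buffering count, regardless of how many images the Swapchain has.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

inline constexpr std::size_t resource_buffering_v{2};

template <typename Type>
using Buffered = std::array<Type, resource_buffering_v>;

// hypothetical per-frame payload, standing in for RenderSync.
struct FrameData {
  int times_used{};
};

// Advance to the next virtual frame, wrapping around at the end.
constexpr auto next_frame_index(std::size_t const current) -> std::size_t {
  return (current + 1) % resource_buffering_v;
}

// Simulates `frame_count` iterations of the render loop and returns how
// often each virtual frame was used.
auto simulate(int const frame_count) -> Buffered<FrameData> {
  auto frames = Buffered<FrameData>{};
  auto frame_index = std::size_t{};
  for (int i = 0; i < frame_count; ++i) {
    assert(frame_index < frames.size());
    ++frames[frame_index].times_used;
    frame_index = next_frame_index(frame_index);
  }
  return frames;
}
```

With double buffering, five frames use virtual frame 0 three times and virtual frame 1 twice.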

Add a private struct RenderSync to App:

struct RenderSync {

// signaled when Swapchain image has been acquired.

vk::UniqueSemaphore draw{};

// signaled with present Semaphore, waited on before next render.

vk::UniqueFence drawn{};

// used to record rendering commands.

vk::CommandBuffer command_buffer{};

};

Add the new members associated with the Swapchain loop:

// command pool for all render Command Buffers.

vk::UniqueCommandPool m_render_cmd_pool{};

// Sync and Command Buffer for virtual frames.

Buffered<RenderSync> m_render_sync{};

// Current virtual frame index.

std::size_t m_frame_index{};

Add, implement, and call the create function:

void App::create_render_sync() {

// Command Buffers are 'allocated' from a Command Pool (which is 'created'

// like all other Vulkan objects so far). We can allocate all the buffers

// from a single pool here.

auto command_pool_ci = vk::CommandPoolCreateInfo{};

// this flag enables resetting the command buffer for re-recording (unlike a

// single-time submit scenario).

command_pool_ci.setFlags(vk::CommandPoolCreateFlagBits::eResetCommandBuffer)

.setQueueFamilyIndex(m_gpu.queue_family);

m_render_cmd_pool = m_device->createCommandPoolUnique(command_pool_ci);

auto command_buffer_ai = vk::CommandBufferAllocateInfo{};

command_buffer_ai.setCommandPool(*m_render_cmd_pool)

.setCommandBufferCount(static_cast<std::uint32_t>(resource_buffering_v))

.setLevel(vk::CommandBufferLevel::ePrimary);

auto const command_buffers =

m_device->allocateCommandBuffers(command_buffer_ai);

assert(command_buffers.size() == m_render_sync.size());

// we create Render Fences as pre-signaled so that on the first render for

// each virtual frame we don't wait on their fences (since there's nothing

// to wait for yet).

static constexpr auto fence_create_info_v =

vk::FenceCreateInfo{vk::FenceCreateFlagBits::eSignaled};

for (auto [sync, command_buffer] :

std::views::zip(m_render_sync, command_buffers)) {

sync.command_buffer = command_buffer;

sync.draw = m_device->createSemaphoreUnique({});

sync.drawn = m_device->createFenceUnique(fence_create_info_v);

}

}

Swapchain Update

Add a vector of semaphores and populate them in recreate():

void create_present_semaphores();

// ...

// signaled when image is ready to be presented.

std::vector<vk::UniqueSemaphore> m_present_semaphores{};

// ...

auto Swapchain::recreate(glm::ivec2 size) -> bool {

// ...

populate_images();

create_image_views();

// recreate present semaphores as the image count might have changed.

create_present_semaphores();

// ...

}

void Swapchain::create_present_semaphores() {

m_present_semaphores.clear();

m_present_semaphores.resize(m_images.size());

for (auto& semaphore : m_present_semaphores) {

semaphore = m_device.createSemaphoreUnique({});

}

}

Add a function to get the present semaphore corresponding to the acquired image; this semaphore will be signaled by the render command submission:

auto Swapchain::get_present_semaphore() const -> vk::Semaphore {

return *m_present_semaphores.at(m_image_index.value());

}

Swapchain acquire/present operations can have various results. We constrain ourselves to the following:

- eSuccess: all good

- eSuboptimalKHR: also all good (not an error, and this is unlikely to occur on a desktop)

- eErrorOutOfDateKHR: Swapchain needs to be recreated

- Any other vk::Result: fatal/unexpected error

Expressed as a helper function in swapchain.cpp:

auto needs_recreation(vk::Result const result) -> bool {

switch (result) {

case vk::Result::eSuccess:

case vk::Result::eSuboptimalKHR: return false;

case vk::Result::eErrorOutOfDateKHR: return true;

default: break;

}

throw std::runtime_error{"Swapchain Error"};

}

We also want to return the Image, Image View, and size upon successful acquisition of the underlying Swapchain Image. Wrapping those in a struct:

struct RenderTarget {

vk::Image image{};

vk::ImageView image_view{};

vk::Extent2D extent{};

};

VulkanHPP's primary API throws if the vk::Result corresponds to an error (based on the spec). eErrorOutOfDateKHR is technically an error, but it's quite possible to get it when the framebuffer size doesn't match the Swapchain size. To avoid having to deal with exceptions here, we use the alternate API for the acquire and present calls (overloads distinguished by pointer arguments and/or out parameters, and returning a vk::Result).

Implementing the acquire operation:

auto Swapchain::acquire_next_image(vk::Semaphore const to_signal)

-> std::optional<RenderTarget> {

assert(!m_image_index);

static constexpr auto timeout_v = std::numeric_limits<std::uint64_t>::max();

// avoid VulkanHPP ErrorOutOfDateKHR exceptions by using alternate API that

// returns a Result.

auto image_index = std::uint32_t{};

auto const result = m_device.acquireNextImageKHR(

*m_swapchain, timeout_v, to_signal, {}, &image_index);

if (needs_recreation(result)) { return {}; }

m_image_index = static_cast<std::size_t>(image_index);

return RenderTarget{

.image = m_images.at(*m_image_index),

.image_view = *m_image_views.at(*m_image_index),

.extent = m_ci.imageExtent,

};

}

Similarly, present:

auto Swapchain::present(vk::Queue const queue)

-> bool {

auto const image_index = static_cast<std::uint32_t>(m_image_index.value());

auto const wait_semaphore =

*m_present_semaphores.at(static_cast<std::size_t>(image_index));

auto present_info = vk::PresentInfoKHR{};

present_info.setSwapchains(*m_swapchain)

.setImageIndices(image_index)

.setWaitSemaphores(wait_semaphore);

// avoid VulkanHPP ErrorOutOfDateKHR exceptions by using alternate API.

auto const result = queue.presentKHR(&present_info);

m_image_index.reset();

return !needs_recreation(result);

}

It is the responsibility of the user (class App) to recreate the Swapchain on receiving std::nullopt / false return values for either operation. Users will also need to transition the layouts of the returned images between acquire and present operations. Add a helper to assist in that process, and extract the Image Subresource Range out as a common constant:

constexpr auto subresource_range_v = [] {

auto ret = vk::ImageSubresourceRange{};

// this is a color image with 1 layer and 1 mip-level (the default).

ret.setAspectMask(vk::ImageAspectFlagBits::eColor)

.setLayerCount(1)

.setLevelCount(1);

return ret;

}();

// ...

auto Swapchain::base_barrier() const -> vk::ImageMemoryBarrier2 {

// fill up the parts common to all barriers.

auto ret = vk::ImageMemoryBarrier2{};

ret.setImage(m_images.at(m_image_index.value()))

.setSubresourceRange(subresource_range_v)

.setSrcQueueFamilyIndex(m_gpu.queue_family)

.setDstQueueFamilyIndex(m_gpu.queue_family);

return ret;

}

Dynamic Rendering

Dynamic Rendering enables us to avoid using Render Passes, which are quite a bit more verbose (but also generally more performant on tiled GPUs). Here we tie together the Swapchain, Render Sync, and rendering. We are not ready to actually render anything yet, but can clear the image to a particular color.

Add these new members to App:

auto acquire_render_target() -> bool;

auto begin_frame() -> vk::CommandBuffer;

void transition_for_render(vk::CommandBuffer command_buffer) const;

void render(vk::CommandBuffer command_buffer);

void transition_for_present(vk::CommandBuffer command_buffer) const;

void submit_and_present();

// ...

glm::ivec2 m_framebuffer_size{};

std::optional<RenderTarget> m_render_target{};

The main loop can now use these to implement the Swapchain and rendering loop:

while (glfwWindowShouldClose(m_window.get()) == GLFW_FALSE) {

glfwPollEvents();

if (!acquire_render_target()) { continue; }

auto const command_buffer = begin_frame();

transition_for_render(command_buffer);

render(command_buffer);

transition_for_present(command_buffer);

submit_and_present();

}

Before acquiring a Swapchain image, we need to wait for the current frame's fence. If acquisition is successful, reset the fence ('un'signal it):

auto App::acquire_render_target() -> bool {

m_framebuffer_size = glfw::framebuffer_size(m_window.get());

// minimized? skip loop.

if (m_framebuffer_size.x <= 0 || m_framebuffer_size.y <= 0) {

return false;

}

auto& render_sync = m_render_sync.at(m_frame_index);

// wait for the fence to be signaled.

static constexpr auto fence_timeout_v =

static_cast<std::uint64_t>(std::chrono::nanoseconds{3s}.count());

auto result =

m_device->waitForFences(*render_sync.drawn, vk::True, fence_timeout_v);

if (result != vk::Result::eSuccess) {

throw std::runtime_error{"Failed to wait for Render Fence"};

}

m_render_target = m_swapchain->acquire_next_image(*render_sync.draw);

if (!m_render_target) {

// acquire failure => ErrorOutOfDate. Recreate Swapchain.

m_swapchain->recreate(m_framebuffer_size);

return false;

}

// reset fence _after_ acquisition of image: if it fails, the

// fence remains signaled.

m_device->resetFences(*render_sync.drawn);

return true;

}

Since the fence has been reset, a queue submission that signals it must be made before continuing; otherwise the app will deadlock on the next wait (and eventually throw after 3s). Begin Command Buffer recording:

auto App::begin_frame() -> vk::CommandBuffer {

auto const& render_sync = m_render_sync.at(m_frame_index);

auto command_buffer_bi = vk::CommandBufferBeginInfo{};

// this flag means recorded commands will not be reused.

command_buffer_bi.setFlags(vk::CommandBufferUsageFlagBits::eOneTimeSubmit);

render_sync.command_buffer.begin(command_buffer_bi);

return render_sync.command_buffer;

}

Transition the image for rendering, ie Attachment Optimal layout. Set up the image barrier and record it:

void App::transition_for_render(vk::CommandBuffer const command_buffer) const {

auto dependency_info = vk::DependencyInfo{};

auto barrier = m_swapchain->base_barrier();

// Undefined => AttachmentOptimal

// the barrier must wait for prior color attachment operations to complete,

// and block subsequent ones.

barrier.setOldLayout(vk::ImageLayout::eUndefined)

.setNewLayout(vk::ImageLayout::eAttachmentOptimal)

.setSrcAccessMask(vk::AccessFlagBits2::eColorAttachmentRead |

vk::AccessFlagBits2::eColorAttachmentWrite)

.setSrcStageMask(vk::PipelineStageFlagBits2::eColorAttachmentOutput)

.setDstAccessMask(barrier.srcAccessMask)

.setDstStageMask(barrier.srcStageMask);

dependency_info.setImageMemoryBarriers(barrier);

command_buffer.pipelineBarrier2(dependency_info);

}

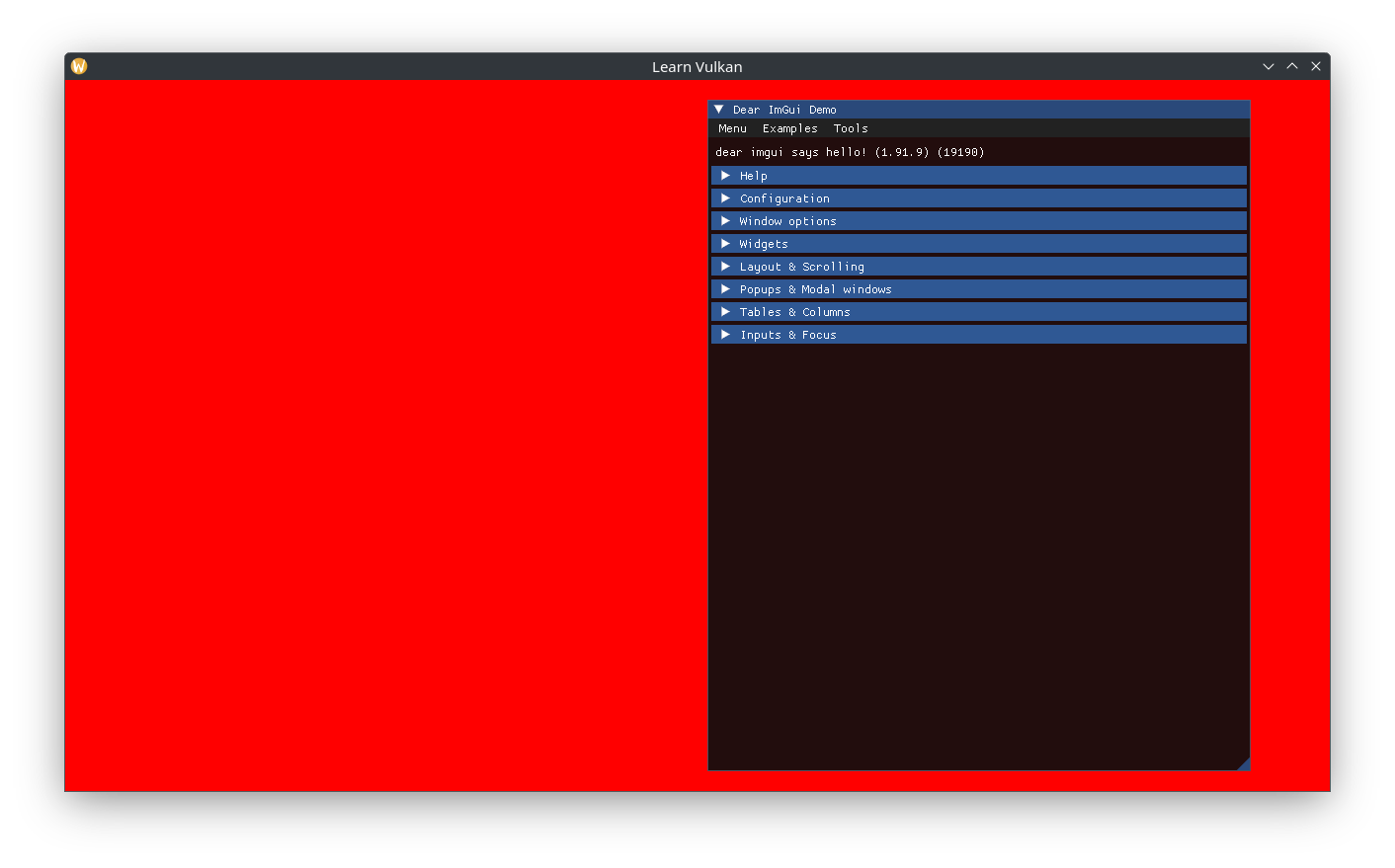

Create a Rendering Attachment Info using the acquired image as the color target. We use a red clear color, set the Load Op to clear the image, and set the Store Op to store the results (currently just the cleared image). Set up a Rendering Info object with the color attachment and the entire image as the render area. Finally, execute the render:

void App::render(vk::CommandBuffer const command_buffer) {

auto color_attachment = vk::RenderingAttachmentInfo{};

color_attachment.setImageView(m_render_target->image_view)

.setImageLayout(vk::ImageLayout::eAttachmentOptimal)

.setLoadOp(vk::AttachmentLoadOp::eClear)

.setStoreOp(vk::AttachmentStoreOp::eStore)

// temporarily red.

.setClearValue(vk::ClearColorValue{1.0f, 0.0f, 0.0f, 1.0f});

auto rendering_info = vk::RenderingInfo{};

auto const render_area =

vk::Rect2D{vk::Offset2D{}, m_render_target->extent};

rendering_info.setRenderArea(render_area)

.setColorAttachments(color_attachment)

.setLayerCount(1);

command_buffer.beginRendering(rendering_info);

// draw stuff here.

command_buffer.endRendering();

}

Transition the image for presentation:

void App::transition_for_present(vk::CommandBuffer const command_buffer) const {

auto dependency_info = vk::DependencyInfo{};

auto barrier = m_swapchain->base_barrier();

// AttachmentOptimal => PresentSrc

// the barrier must wait for prior color attachment operations to complete,

// and block subsequent ones.

barrier.setOldLayout(vk::ImageLayout::eAttachmentOptimal)

.setNewLayout(vk::ImageLayout::ePresentSrcKHR)

.setSrcAccessMask(vk::AccessFlagBits2::eColorAttachmentRead |

vk::AccessFlagBits2::eColorAttachmentWrite)

.setSrcStageMask(vk::PipelineStageFlagBits2::eColorAttachmentOutput)

.setDstAccessMask(barrier.srcAccessMask)

.setDstStageMask(barrier.srcStageMask);

dependency_info.setImageMemoryBarriers(barrier);

command_buffer.pipelineBarrier2(dependency_info);

}

End the command buffer and submit it. The submission waits on the draw Semaphore, which the Swapchain signals once the acquired image is ready, before its color attachment output stage executes. On completion it signals the present Semaphore and the drawn Fence; the latter is waited on the next time this virtual frame is processed. Finally, we increment the frame index and present the image, with the present operation waiting on the present semaphore:

void App::submit_and_present() {

auto const& render_sync = m_render_sync.at(m_frame_index);

render_sync.command_buffer.end();

auto submit_info = vk::SubmitInfo2{};

auto const command_buffer_info =

vk::CommandBufferSubmitInfo{render_sync.command_buffer};

auto wait_semaphore_info = vk::SemaphoreSubmitInfo{};

wait_semaphore_info.setSemaphore(*render_sync.draw)

.setStageMask(vk::PipelineStageFlagBits2::eColorAttachmentOutput);

auto signal_semaphore_info = vk::SemaphoreSubmitInfo{};

signal_semaphore_info.setSemaphore(m_swapchain->get_present_semaphore())

.setStageMask(vk::PipelineStageFlagBits2::eColorAttachmentOutput);

submit_info.setCommandBufferInfos(command_buffer_info)

.setWaitSemaphoreInfos(wait_semaphore_info)

.setSignalSemaphoreInfos(signal_semaphore_info);

m_queue.submit2(submit_info, *render_sync.drawn);

m_frame_index = (m_frame_index + 1) % m_render_sync.size();

m_render_target.reset();

// an eErrorOutOfDateKHR result is not guaranteed if the

// framebuffer size does not match the Swapchain image size, check it

// explicitly.

auto const fb_size_changed = m_framebuffer_size != m_swapchain->get_size();

auto const out_of_date = !m_swapchain->present(m_queue);

if (fb_size_changed || out_of_date) {

m_swapchain->recreate(m_framebuffer_size);

}

}

Wayland users: congratulations, you can finally see and interact with the window!

RenderDoc on Wayland

At the time of writing, RenderDoc doesn't support inspecting Wayland applications. Temporarily force X11 (XWayland) by calling glfwInitHint() before glfwInit():

glfwInitHint(GLFW_PLATFORM, GLFW_PLATFORM_X11);

Setting up a command line option to conditionally call this is a simple and flexible approach: just set that argument in RenderDoc itself and/or pass it whenever an X11 backend is desired:

// main.cpp

// skip the first argument.

auto args = std::span{argv, static_cast<std::size_t>(argc)}.subspan(1);

while (!args.empty()) {

auto const arg = std::string_view{args.front()};

if (arg == "-x" || arg == "--force-x11") {

glfwInitHint(GLFW_PLATFORM, GLFW_PLATFORM_X11);

}

args = args.subspan(1);

}

lvk::App{}.run();

Dear ImGui

Dear ImGui does not have native CMake support, and while adding its sources to the executable is an option, we will add it as an external library target, imgui, to isolate it (and its compile warnings, etc.) from our own code. This requires some changes to the ext target structure, since imgui itself needs to link to GLFW and Vulkan-Headers, have VK_NO_PROTOTYPES defined, etc. learn-vk-ext then links to imgui and any other libraries (currently only glm). We are using Dear ImGui v1.91.9, which has decent support for Dynamic Rendering.

class DearImGui

Dear ImGui has its own initialization and loop, which we encapsulate into class DearImGui:

struct DearImGuiCreateInfo {

GLFWwindow* window{};

std::uint32_t api_version{};

vk::Instance instance{};

vk::PhysicalDevice physical_device{};

std::uint32_t queue_family{};

vk::Device device{};

vk::Queue queue{};

vk::Format color_format{}; // single color attachment.

vk::SampleCountFlagBits samples{};

};

class DearImGui {

public:

using CreateInfo = DearImGuiCreateInfo;

explicit DearImGui(CreateInfo const& create_info);

void new_frame();

void end_frame();

void render(vk::CommandBuffer command_buffer) const;

private:

enum class State : std::int8_t { Ended, Begun };

struct Deleter {

void operator()(vk::Device device) const;

};

State m_state{};

Scoped<vk::Device, Deleter> m_device{};

};

In the constructor, we start by creating the ImGui Context, loading Vulkan functions, and initializing GLFW for Vulkan:

IMGUI_CHECKVERSION();

ImGui::CreateContext();

static auto const load_vk_func = +[](char const* name, void* user_data) {

return VULKAN_HPP_DEFAULT_DISPATCHER.vkGetInstanceProcAddr(

*static_cast<vk::Instance*>(user_data), name);

};

auto instance = create_info.instance;

ImGui_ImplVulkan_LoadFunctions(create_info.api_version, load_vk_func,

&instance);

if (!ImGui_ImplGlfw_InitForVulkan(create_info.window, true)) {

throw std::runtime_error{"Failed to initialize Dear ImGui"};

}

Then initialize Dear ImGui for Vulkan:

auto init_info = ImGui_ImplVulkan_InitInfo{};

init_info.ApiVersion = create_info.api_version;

init_info.Instance = create_info.instance;

init_info.PhysicalDevice = create_info.physical_device;

init_info.Device = create_info.device;

init_info.QueueFamily = create_info.queue_family;

init_info.Queue = create_info.queue;

init_info.MinImageCount = 2;

init_info.ImageCount = static_cast<std::uint32_t>(resource_buffering_v);

init_info.MSAASamples =

static_cast<VkSampleCountFlagBits>(create_info.samples);

init_info.DescriptorPoolSize = 2;

auto pipeline_rendering_ci = vk::PipelineRenderingCreateInfo{};

pipeline_rendering_ci.setColorAttachmentCount(1).setColorAttachmentFormats(

create_info.color_format);

init_info.PipelineRenderingCreateInfo = pipeline_rendering_ci;

init_info.UseDynamicRendering = true;

if (!ImGui_ImplVulkan_Init(&init_info)) {

throw std::runtime_error{"Failed to initialize Dear ImGui"};

}

ImGui_ImplVulkan_CreateFontsTexture();

Since we are using an sRGB format and Dear ImGui is not color-space aware, we need to convert its style colors to linear space (so that they shift back to the original values by gamma correction):

ImGui::StyleColorsDark();

// NOLINTNEXTLINE(cppcoreguidelines-pro-bounds-array-to-pointer-decay)

for (auto& colour : ImGui::GetStyle().Colors) {

auto const linear = glm::convertSRGBToLinear(

glm::vec4{colour.x, colour.y, colour.z, colour.w});

colour = ImVec4{linear.x, linear.y, linear.z, linear.w};

}

ImGui::GetStyle().Colors[ImGuiCol_WindowBg].w = 0.99f; // more opaque
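For reference, this is the standard piecewise sRGB transfer function pair, written out as a standalone sketch (the function names are hypothetical; the guide itself relies on glm::convertSRGBToLinear). When a shader writes to an sRGB-format attachment, the hardware applies the linear-to-sRGB encode, so decoding ImGui's sRGB-authored style colors first makes them round-trip to roughly their original values instead of appearing washed out. Only R, G and B are converted; alpha is not gamma encoded.

```cpp
#include <cassert>
#include <cmath>

// Standard sRGB decode: maps an sRGB-encoded channel in [0, 1] to linear.
auto srgb_to_linear(float const encoded) -> float {
  assert(encoded >= 0.0f && encoded <= 1.0f);
  if (encoded <= 0.04045f) { return encoded / 12.92f; }
  return std::pow((encoded + 0.055f) / 1.055f, 2.4f);
}

// The inverse (encode), as applied when storing to an sRGB-format image.
auto linear_to_srgb(float const linear) -> float {
  assert(linear >= 0.0f && linear <= 1.0f);
  if (linear <= 0.0031308f) { return linear * 12.92f; }
  return 1.055f * std::pow(linear, 1.0f / 2.4f) - 0.055f;
}
```

For example, an sRGB value of 0.5 decodes to roughly 0.214 in linear space, and encoding that result gives back 0.5.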

Finally, store the device in the Scoped member (so that the Deleter runs on destruction) and implement the Deleter:

m_device = Scoped<vk::Device, Deleter>{create_info.device};

// ...

void DearImGui::Deleter::operator()(vk::Device const device) const {

device.waitIdle();

ImGui_ImplVulkan_DestroyFontsTexture();

ImGui_ImplVulkan_Shutdown();

ImGui_ImplGlfw_Shutdown();

ImGui::DestroyContext();

}

The remaining functions are straightforward:

void DearImGui::new_frame() {

if (m_state == State::Begun) { end_frame(); }

ImGui_ImplGlfw_NewFrame();

ImGui_ImplVulkan_NewFrame();

ImGui::NewFrame();

m_state = State::Begun;

}

void DearImGui::end_frame() {

if (m_state == State::Ended) { return; }

ImGui::Render();

m_state = State::Ended;

}

// NOLINTNEXTLINE(readability-convert-member-functions-to-static)

void DearImGui::render(vk::CommandBuffer const command_buffer) const {

auto* data = ImGui::GetDrawData();

if (data == nullptr) { return; }

ImGui_ImplVulkan_RenderDrawData(data, command_buffer);

}
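The new_frame()/end_frame() pair above is written so that callers cannot unbalance it. To see the rules in isolation, here is a hypothetical `FrameGuard` mock (not part of this guide) that keeps the same `State` logic but counts calls instead of invoking ImGui:

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical mock of DearImGui's begin/end bookkeeping: instead of calling
// ImGui, it counts how often each step would run.
class FrameGuard {
 public:
  void new_frame() {
    // a frame left open is closed first, like DearImGui::new_frame().
    if (m_state == State::Begun) { end_frame(); }
    ++new_frame_calls;
    m_state = State::Begun;
  }

  void end_frame() {
    // ending twice is a no-op, so callers need not track the state.
    if (m_state == State::Ended) { return; }
    ++render_calls; // stands in for ImGui::Render().
    m_state = State::Ended;
  }

  int new_frame_calls{};
  int render_calls{};

 private:
  enum class State : std::int8_t { Ended, Begun };
  State m_state{}; // value-initialized to Ended.
};
```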

ImGui Integration

Update Swapchain to expose its image format:

[[nodiscard]] auto get_format() const -> vk::Format {

return m_ci.imageFormat;

}

class App can now store a std::optional<DearImGui> member and add/call its create function:

void App::create_imgui() {

auto const imgui_ci = DearImGui::CreateInfo{

.window = m_window.get(),

.api_version = vk_version_v,

.instance = *m_instance,

.physical_device = m_gpu.device,

.queue_family = m_gpu.queue_family,

.device = *m_device,

.queue = m_queue,

.color_format = m_swapchain->get_format(),

.samples = vk::SampleCountFlagBits::e1,

};

m_imgui.emplace(imgui_ci);

}

Start a new ImGui frame after resetting the render fence, and show the demo window:

m_device->resetFences(*render_sync.drawn);

m_imgui->new_frame();

// ...

command_buffer.beginRendering(rendering_info);

ImGui::ShowDemoWindow();

// draw stuff here.

command_buffer.endRendering();

ImGui doesn't draw anything here (the actual draw command requires the Command Buffer); this is just a good customization point for all higher-level logic.

We use a separate render pass for Dear ImGui, again for isolation, and to enable us to change the main render pass later, eg by adding a depth buffer attachment (DearImGui is set up assuming its render pass will only use a single color attachment).

m_imgui->end_frame();

// we don't want to clear the image again, instead load it intact after the

// previous pass.

color_attachment.setLoadOp(vk::AttachmentLoadOp::eLoad);

rendering_info.setColorAttachments(color_attachment)

.setPDepthAttachment(nullptr);

command_buffer.beginRendering(rendering_info);

m_imgui->render(command_buffer);

command_buffer.endRendering();

Shader Objects

A Vulkan Graphics Pipeline is a large object that encompasses the entire graphics pipeline. It consists of many stages, all of which are involved in a single draw() call. There is, however, an extension called VK_EXT_shader_object which enables avoiding graphics pipelines entirely. Almost all pipeline state becomes dynamic, ie set at draw time, and the only Vulkan handles to own are ShaderEXT objects. For a comprehensive guide, check out the Vulkan Sample from Khronos.

Vulkan requires shader code to be provided as SPIR-V (IR). We shall use glslc (part of the Vulkan SDK) to compile GLSL to SPIR-V manually when required.

Locating Assets

Before we can use shaders, we need to load them as asset/data files. To do that correctly, the asset directory first needs to be located. There are a few ways to go about this; we will look for a particular subdirectory, starting from the working directory and walking up the parent directory tree. This enables app in any project/build subdirectory to locate assets/, as in the examples below:

.
|-- assets/
|-- app
|-- build/
    |-- app
|-- out/
    |-- default/Release/
        |-- app
    |-- ubsan/Debug/
        |-- app

In a release package you would want to use the path to the executable instead (and probably not perform an "upfind" walk): the working directory could be anywhere, whereas assets shipped with the package will be in the vicinity of the executable.

Assets Directory

Add a member to App to store this path to assets/:

namespace fs = std::filesystem;

// ...

fs::path m_assets_dir{};

Add a helper function to locate the assets dir, and assign m_assets_dir to its return value at the top of run():

[[nodiscard]] auto locate_assets_dir() -> fs::path {

// look for '<path>/assets/', starting from the working

// directory and walking up the parent directory tree.

static constexpr std::string_view dir_name_v{"assets"};

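for (auto path = fs::current_path(); !path.empty();

path = path.parent_path()) {

auto ret = path / dir_name_v;

if (fs::is_directory(ret)) { return ret; }

// the parent of a root path is the root itself ("/" => "/"): stop here,

// otherwise this loop would never end if assets/ does not exist.

if (path == path.parent_path()) { break; }

}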

std::println("[lvk] Warning: could not locate '{}' directory", dir_name_v);

return fs::current_path();

}

// ...

m_assets_dir = locate_assets_dir();
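A standalone, testable variant of the same "upfind" walk is sketched below. `upfind_directory()` is a hypothetical name: it takes the (absolute) start directory as a parameter instead of using the working directory, returns std::nullopt instead of falling back, and stops explicitly at the filesystem root, since parent_path() of a root path is the root itself.

```cpp
#include <cassert>
#include <filesystem>
#include <optional>
#include <string_view>
#include <utility>

namespace fs = std::filesystem;

// Walks from `start` up to the filesystem root, returning the first
// '<path>/<name>' that is a directory.
auto upfind_directory(fs::path start, std::string_view const name)
    -> std::optional<fs::path> {
  assert(!name.empty());
  for (auto path = std::move(start); !path.empty();
       path = path.parent_path()) {
    auto candidate = path / name;
    if (fs::is_directory(candidate)) { return candidate; }
    if (path == path.parent_path()) { break; } // reached the root.
  }
  return std::nullopt;
}
```

Usage mirrors the layout above: starting from out/default/Release/, the walk finds the assets/ directory two levels up.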

Shader Program

To use Shader Objects we need to enable the corresponding feature and extension during device creation:

auto shader_object_feature =

vk::PhysicalDeviceShaderObjectFeaturesEXT{vk::True};

dynamic_rendering_feature.setPNext(&shader_object_feature);

// ...

// we need two device extensions: Swapchain and Shader Object.

static constexpr auto extensions_v = std::array{

VK_KHR_SWAPCHAIN_EXTENSION_NAME,

"VK_EXT_shader_object",

};

Emulation Layer

Device creation may now fail if the driver or physical device does not support VK_EXT_shader_object (this is especially likely with Intel). The Vulkan SDK provides a layer that implements this extension: VK_LAYER_KHRONOS_shader_object. Adding this layer to the Instance Create Info should unblock usage of this feature:

// ...

// add the Shader Object emulation layer.

static constexpr auto layers_v = std::array{

"VK_LAYER_KHRONOS_shader_object",

};

instance_ci.setPEnabledLayerNames(layers_v);

m_instance = vk::createInstanceUnique(instance_ci);

// ...

Since desired layers may not be available, we can set up a defensive check:

[[nodiscard]] auto get_layers(std::span<char const* const> desired)

-> std::vector<char const*> {

auto ret = std::vector<char const*>{};

ret.reserve(desired.size());

auto const available = vk::enumerateInstanceLayerProperties();

for (char const* layer : desired) {

auto const pred = [layer = std::string_view{layer}](

vk::LayerProperties const& properties) {

return properties.layerName == layer;

};

if (std::ranges::find_if(available, pred) == available.end()) {

std::println("[lvk] [WARNING] Vulkan Layer '{}' not found", layer);

continue;

}

ret.push_back(layer);

}

return ret;

}

// ...

auto const layers = get_layers(layers_v);

instance_ci.setPEnabledLayerNames(layers);

class ShaderProgram

We will encapsulate both vertex and fragment shaders into a single ShaderProgram, which will also bind the shaders before a draw, and expose/set various dynamic states.

In shader_program.hpp, first add a ShaderProgramCreateInfo struct:

struct ShaderProgramCreateInfo {

vk::Device device;

std::span<std::uint32_t const> vertex_spirv;

std::span<std::uint32_t const> fragment_spirv;

std::span<vk::DescriptorSetLayout const> set_layouts;

};

Descriptor Sets and their Layouts will be covered later.

Start with a skeleton definition:

class ShaderProgram {

public:

using CreateInfo = ShaderProgramCreateInfo;

explicit ShaderProgram(CreateInfo const& create_info);

private:

std::vector<vk::UniqueShaderEXT> m_shaders{};

ScopedWaiter m_waiter{};

};

The definition of the constructor is fairly straightforward:

ShaderProgram::ShaderProgram(CreateInfo const& create_info) {

auto const create_shader_ci =

[&create_info](std::span<std::uint32_t const> spirv) {

auto ret = vk::ShaderCreateInfoEXT{};

ret.setCodeSize(spirv.size_bytes())

.setPCode(spirv.data())

// set common parameters.

.setSetLayouts(create_info.set_layouts)

.setCodeType(vk::ShaderCodeTypeEXT::eSpirv)

.setPName("main");

return ret;

};

auto shader_cis = std::array{

create_shader_ci(create_info.vertex_spirv),

create_shader_ci(create_info.fragment_spirv),

};

shader_cis[0]

.setStage(vk::ShaderStageFlagBits::eVertex)

.setNextStage(vk::ShaderStageFlagBits::eFragment);

shader_cis[1].setStage(vk::ShaderStageFlagBits::eFragment);

auto result = create_info.device.createShadersEXTUnique(shader_cis);

if (result.result != vk::Result::eSuccess) {

throw std::runtime_error{"Failed to create Shader Objects"};

}

m_shaders = std::move(result.value);

m_waiter = create_info.device;

}

Expose some dynamic states via public members:

static constexpr auto color_blend_equation_v = [] {

auto ret = vk::ColorBlendEquationEXT{};

ret.setColorBlendOp(vk::BlendOp::eAdd)

// standard alpha blending:

// (alpha * src) + (1 - alpha) * dst

.setSrcColorBlendFactor(vk::BlendFactor::eSrcAlpha)

.setDstColorBlendFactor(vk::BlendFactor::eOneMinusSrcAlpha);

return ret;

}();

// ...

vk::PrimitiveTopology topology{vk::PrimitiveTopology::eTriangleList};

vk::PolygonMode polygon_mode{vk::PolygonMode::eFill};

float line_width{1.0f};

vk::ColorBlendEquationEXT color_blend_equation{color_blend_equation_v};

vk::CompareOp depth_compare_op{vk::CompareOp::eLessOrEqual};

Encapsulate booleans into bit flags:

// bit flags for various binary states.

enum : std::uint8_t {

None = 0,

AlphaBlend = 1 << 0, // turn on alpha blending.

DepthTest = 1 << 1, // turn on depth write and test.

};

// ...

static constexpr auto flags_v = AlphaBlend | DepthTest;

// ...

std::uint8_t flags{flags_v};
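Testing and clearing individual states then comes down to bitwise operations. The helpers below (`has_flag()` and `without_flag()`) are hypothetical, shown standalone with a copy of the same enum; a ShaderProgram could query its own `flags` member the same way when setting the corresponding dynamic states.

```cpp
#include <cstdint>

// Same bit layout as the ShaderProgram flags.
enum : std::uint8_t {
  None = 0,
  AlphaBlend = 1 << 0, // turn on alpha blending.
  DepthTest = 1 << 1,  // turn on depth write and test.
};

// true if every bit of `flag` is set in `flags`.
constexpr auto has_flag(std::uint8_t const flags, std::uint8_t const flag)
    -> bool {
  return (flags & flag) == flag;
}

// returns `flags` with the bits of `flag` cleared.
constexpr auto without_flag(std::uint8_t const flags, std::uint8_t const flag)
    -> std::uint8_t {
  return static_cast<std::uint8_t>(flags & ~flag);
}
```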

There is one more piece of pipeline state needed: vertex input. We will consider this to be constant per shader and store it in the constructor:

// shader_program.hpp

// vertex attributes and bindings.

struct ShaderVertexInput {

std::span<vk::VertexInputAttributeDescription2EXT const> attributes{};

std::span<vk::VertexInputBindingDescription2EXT const> bindings{};

};

struct ShaderProgramCreateInfo {

// ...

ShaderVertexInput vertex_input{};

// ...

};

class ShaderProgram {

// ...

ShaderVertexInput m_vertex_input{};

std::vector<vk::UniqueShaderEXT> m_shaders{};

// ...

};

// shader_program.cpp

ShaderProgram::ShaderProgram(CreateInfo const& create_info)

: m_vertex_input(create_info.vertex_input) {

// ...

}
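The spans in ShaderVertexInput stay empty for now, since the triangle's vertices will be hard-coded in the shader. To get a sense of what they will eventually hold: a binding description needs the per-vertex stride, and each attribute needs the byte offset of its member. The sketch below assumes a hypothetical interleaved Vertex struct and computes those numbers with sizeof and offsetof. The Vulkan structs themselves are left out so the snippet only needs the standard library.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical interleaved vertex layout: a 2D position followed by an RGB
// color, both made of 32-bit floats (not yet used by the tutorial).
struct Vertex {
  float position[2];
  float color[3];
};

// The part of vk::VertexInputAttributeDescription2EXT that depends on the
// struct layout: the shader input location and the member's byte offset.
struct AttributeLayout {
  std::uint32_t location;
  std::uint32_t offset;
};

// Would go into vk::VertexInputBindingDescription2EXT::stride.
inline constexpr auto vertex_stride_v = std::uint32_t{sizeof(Vertex)};

inline constexpr AttributeLayout vertex_attributes_v[] = {
    // layout (location = 0) in vec2: format would be eR32G32Sfloat.
    {0, offsetof(Vertex, position)},
    // layout (location = 1) in vec3: format would be eR32G32B32Sfloat.
    {1, offsetof(Vertex, color)},
};
```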

The API to bind will take the command buffer and the framebuffer size (to set the viewport and scissor):

void bind(vk::CommandBuffer command_buffer,

glm::ivec2 framebuffer_size) const;

Add helper member functions and implement bind() by calling them in succession:

static void set_viewport_scissor(vk::CommandBuffer command_buffer,

glm::ivec2 framebuffer_size);

static void set_static_states(vk::CommandBuffer command_buffer);

void set_common_states(vk::CommandBuffer command_buffer) const;

void set_vertex_states(vk::CommandBuffer command_buffer) const;

void set_fragment_states(vk::CommandBuffer command_buffer) const;

void bind_shaders(vk::CommandBuffer command_buffer) const;

// ...

void ShaderProgram::bind(vk::CommandBuffer const command_buffer,

glm::ivec2 const framebuffer_size) const {

set_viewport_scissor(command_buffer, framebuffer_size);

set_static_states(command_buffer);

set_common_states(command_buffer);

set_vertex_states(command_buffer);

set_fragment_states(command_buffer);

bind_shaders(command_buffer);

}

Implementations are long but straightforward:

namespace {

constexpr auto to_vkbool(bool const value) {

return value ? vk::True : vk::False;

}

} // namespace

// ...

void ShaderProgram::set_viewport_scissor(vk::CommandBuffer const command_buffer,

glm::ivec2 const framebuffer_size) {

auto const fsize = glm::vec2{framebuffer_size};

auto viewport = vk::Viewport{};

// flip the viewport about the X-axis (negative height):

// https://www.saschawillems.de/blog/2019/03/29/flipping-the-vulkan-viewport/

viewport.setX(0.0f).setY(fsize.y).setWidth(fsize.x).setHeight(-fsize.y);

command_buffer.setViewportWithCount(viewport);

auto const usize = glm::uvec2{framebuffer_size};

auto const scissor =

vk::Rect2D{vk::Offset2D{}, vk::Extent2D{usize.x, usize.y}};

command_buffer.setScissorWithCount(scissor);

}

void ShaderProgram::set_static_states(vk::CommandBuffer const command_buffer) {

command_buffer.setRasterizerDiscardEnable(vk::False);

command_buffer.setRasterizationSamplesEXT(vk::SampleCountFlagBits::e1);

command_buffer.setSampleMaskEXT(vk::SampleCountFlagBits::e1, 0xff);

command_buffer.setAlphaToCoverageEnableEXT(vk::False);

command_buffer.setCullMode(vk::CullModeFlagBits::eNone);

command_buffer.setFrontFace(vk::FrontFace::eCounterClockwise);

command_buffer.setDepthBiasEnable(vk::False);

command_buffer.setStencilTestEnable(vk::False);

command_buffer.setPrimitiveRestartEnable(vk::False);

command_buffer.setColorWriteMaskEXT(0, ~vk::ColorComponentFlags{});

}

void ShaderProgram::set_common_states(

vk::CommandBuffer const command_buffer) const {

auto const depth_test = to_vkbool((flags & DepthTest) == DepthTest);

command_buffer.setDepthWriteEnable(depth_test);

command_buffer.setDepthTestEnable(depth_test);

command_buffer.setDepthCompareOp(depth_compare_op);

command_buffer.setPolygonModeEXT(polygon_mode);

command_buffer.setLineWidth(line_width);

}

void ShaderProgram::set_vertex_states(

vk::CommandBuffer const command_buffer) const {

command_buffer.setVertexInputEXT(m_vertex_input.bindings,

m_vertex_input.attributes);

command_buffer.setPrimitiveTopology(topology);

}

void ShaderProgram::set_fragment_states(

vk::CommandBuffer const command_buffer) const {

auto const alpha_blend = to_vkbool((flags & AlphaBlend) == AlphaBlend);

command_buffer.setColorBlendEnableEXT(0, alpha_blend);

command_buffer.setColorBlendEquationEXT(0, color_blend_equation);

}

void ShaderProgram::bind_shaders(vk::CommandBuffer const command_buffer) const {

static constexpr auto stages_v = std::array{

vk::ShaderStageFlagBits::eVertex,

vk::ShaderStageFlagBits::eFragment,

};

auto const shaders = std::array{

*m_shaders[0],

*m_shaders[1],

};

command_buffer.bindShadersEXT(stages_v, shaders);

}
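To see why the negative height in set_viewport_scissor() makes +Y point up (as in OpenGL), apply the viewport transform from the Vulkan specification to the Y coordinate: y_fb = (height / 2) * y_ndc + (y + height / 2), where framebuffer rows grow downwards. The sketch below implements that formula with a hypothetical viewport_y() helper and checks it against the values set above for a 600 pixel tall framebuffer (y = 600, height = -600). Negative viewport heights have been core since Vulkan 1.1 (previously VK_KHR_maintenance1).

```cpp
// Y component of the Vulkan viewport transform (framebuffer row 0 is the top):
//   y_fb = (height / 2) * y_ndc + (y + height / 2)
// Hypothetical helper for illustration.
[[nodiscard]] constexpr auto viewport_y(float const y_ndc, float const y,
                                        float const height) -> float {
  auto const half_height = height * 0.5f;
  return (half_height * y_ndc) + (y + half_height);
}
```

With the flipped viewport, NDC y = +1 maps to row 0 and y = -1 maps to the last row, so a vertex at y = +0.5 lands in the upper half of the framebuffer.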

GLSL to SPIR-V

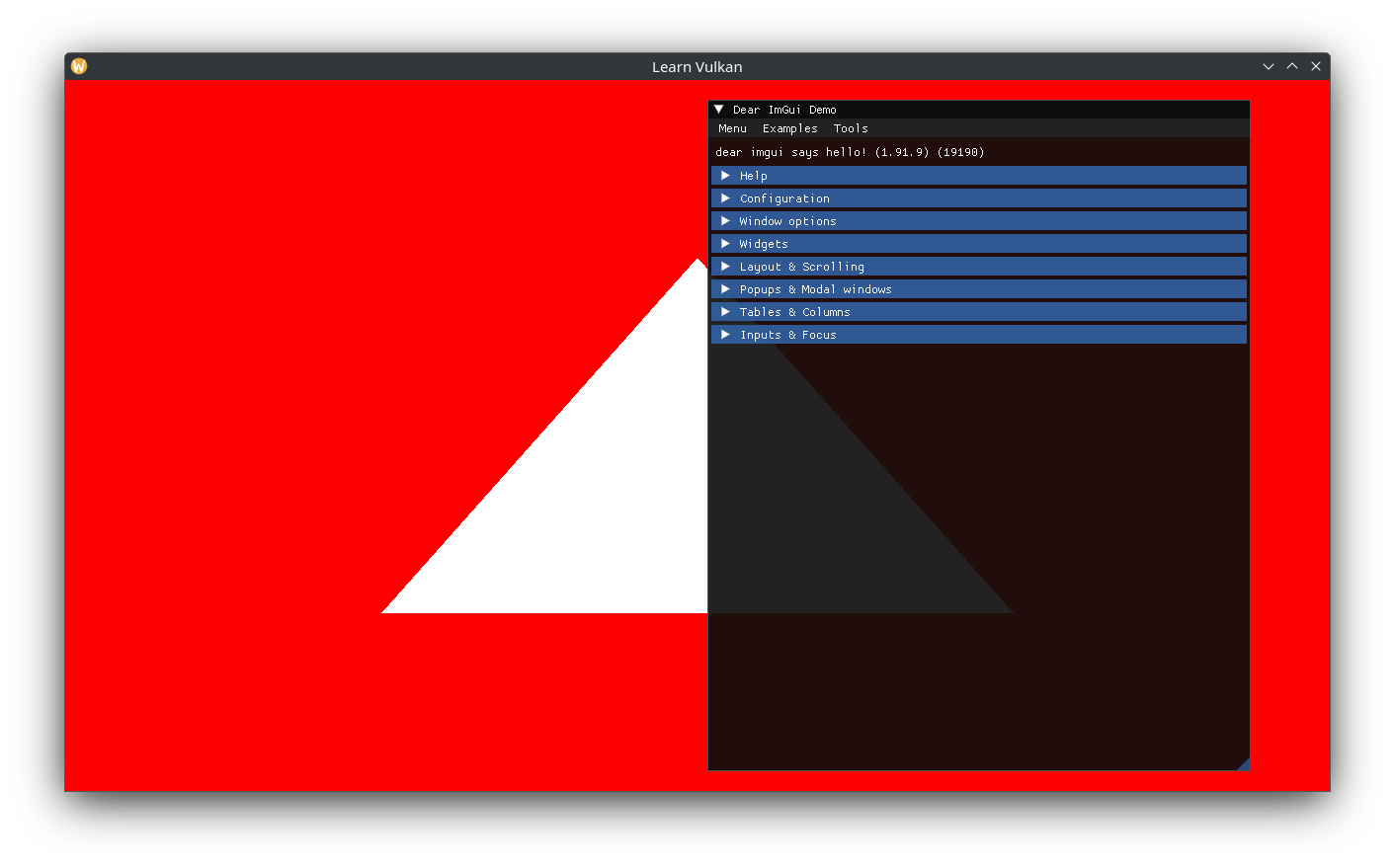

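The vertex shader outputs clip-space positions. Since we set w to 1.0, these coincide with normalized device coordinates (NDC), where X and Y range from -1 to +1. Hard-code a triangle's vertex positions in a new vertex shader and save it to src/glsl/shader.vert: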

#version 450 core

void main() {

const vec2 positions[] = {

vec2(-0.5, -0.5),

vec2(0.5, -0.5),

vec2(0.0, 0.5),

};

const vec2 position = positions[gl_VertexIndex];

gl_Position = vec4(position, 0.0, 1.0);

}

The fragment shader just outputs white for now, in src/glsl/shader.frag:

#version 450 core

layout (location = 0) out vec4 out_color;

void main() {

out_color = vec4(1.0);

}

Compile both shaders into assets/:

glslc src/glsl/shader.vert -o assets/shader.vert

glslc src/glsl/shader.frag -o assets/shader.frag

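glslc is part of the Vulkan SDK. It infers each shader's stage from the input file's extension (.vert, .frag); the outputs above keep those names but contain SPIR-V binaries.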

Loading SPIR-V

SPIR-V modules are binary streams of 32-bit words, so their size is always a multiple of 4 bytes. As we have seen, the Vulkan API accepts a span of std::uint32_t, so we need to load the file into such a buffer (not a std::vector<std::byte> or another container of 1-byte elements). Add a helper function in app.cpp:

[[nodiscard]] auto to_spir_v(fs::path const& path)

-> std::vector<std::uint32_t> {

// open the file at the end, to get the total size.

auto file = std::ifstream{path, std::ios::binary | std::ios::ate};

if (!file.is_open()) {

throw std::runtime_error{

std::format("Failed to open file: '{}'", path.generic_string())};

}

auto const size = file.tellg();

auto const usize = static_cast<std::uint64_t>(size);

// file data must be uint32 aligned.

if (usize % sizeof(std::uint32_t) != 0) {

throw std::runtime_error{std::format("Invalid SPIR-V size: {}", usize)};

}

// seek to the beginning before reading.

file.seekg({}, std::ios::beg);

auto ret = std::vector<std::uint32_t>{};

ret.resize(usize / sizeof(std::uint32_t));

void* data = ret.data();

file.read(static_cast<char*>(data), size);

return ret;

}

Drawing a Triangle

Add a ShaderProgram member to App, along with functions to create it and to resolve asset paths:

[[nodiscard]] auto asset_path(std::string_view uri) const -> fs::path;

// ...

void create_shader();

// ...

std::optional<ShaderProgram> m_shader{};

Implement and call create_shader() (and asset_path()):

void App::create_shader() {

auto const vertex_spirv = to_spir_v(asset_path("shader.vert"));

auto const fragment_spirv = to_spir_v(asset_path("shader.frag"));

auto const shader_ci = ShaderProgram::CreateInfo{

.device = *m_device,

.vertex_spirv = vertex_spirv,

.fragment_spirv = fragment_spirv,

.vertex_input = {},

.set_layouts = {},

};

m_shader.emplace(shader_ci);

}

auto App::asset_path(std::string_view const uri) const -> fs::path {

return m_assets_dir / uri;

}

Before render() grows to an unwieldy size, extract the higher-level logic into two member functions:

// ImGui code goes here.

void inspect();

// Issue draw calls here.

void draw(vk::CommandBuffer command_buffer) const;

// ...

void App::inspect() {

ImGui::ShowDemoWindow();

// TODO

}

// ...

command_buffer.beginRendering(rendering_info);

inspect();

draw(command_buffer);

command_buffer.endRendering();

We can now bind the shader and use it to draw the triangle whose vertices are hard-coded in it. Making draw() const ensures that it cannot modify any App state:

void App::draw(vk::CommandBuffer const command_buffer) const {

m_shader->bind(command_buffer, m_framebuffer_size);

// current shader has hard-coded logic for 3 vertices.

command_buffer.draw(3, 1, 0, 0);

}
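The arguments to draw() are vertexCount, instanceCount, firstVertex and firstInstance. For a non-indexed draw, gl_VertexIndex runs from firstVertex to firstVertex + vertexCount - 1, which is why the shader can use it to index its hard-coded arrays. A CPU sketch of that sequence for a single instance (vertex_indices() is hypothetical):

```cpp
#include <cstdint>
#include <vector>

// Hypothetical model of the gl_VertexIndex values a non-indexed
// draw(vertex_count, 1, first_vertex, 0) feeds to the vertex shader.
[[nodiscard]] auto vertex_indices(std::uint32_t const vertex_count,
                                  std::uint32_t const first_vertex)
    -> std::vector<std::uint32_t> {
  auto ret = std::vector<std::uint32_t>{};
  ret.reserve(vertex_count);
  for (std::uint32_t i = 0; i < vertex_count; ++i) {
    ret.push_back(first_vertex + i);
  }
  return ret;
}
```

With firstVertex = 1 the last index would be 3, past the end of the 3-element positions array, so the shader relies on the draw starting at vertex 0.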

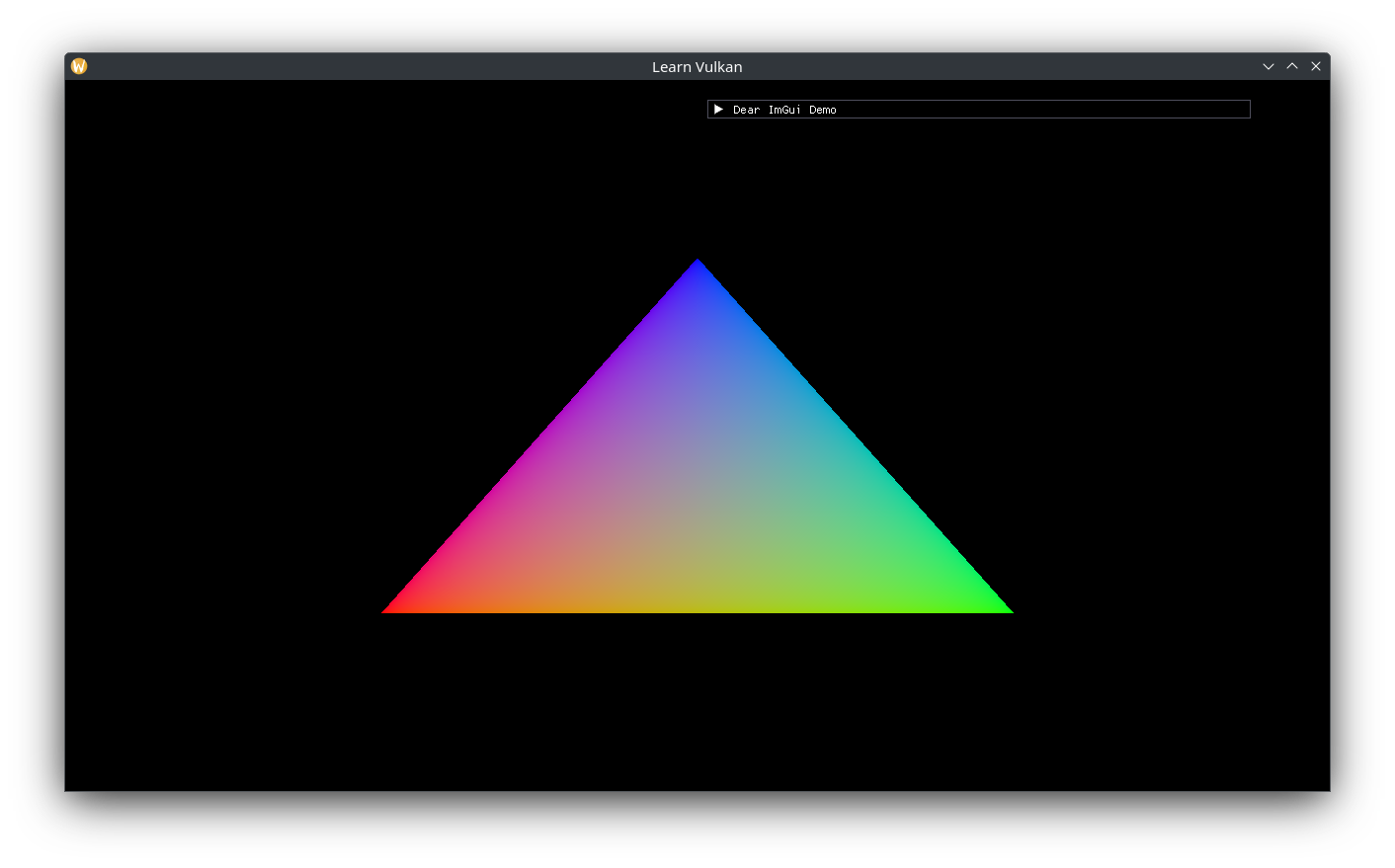

Update the shaders to output a color per vertex, which gets interpolated across the triangle:

// shader.vert

layout (location = 0) out vec3 out_color;

// ...

const vec3 colors[] = {

vec3(1.0, 0.0, 0.0),

vec3(0.0, 1.0, 0.0),

vec3(0.0, 0.0, 1.0),

};

// ...

out_color = colors[gl_VertexIndex];

// shader.frag

layout (location = 0) in vec3 in_color;

// ...

out_color = vec4(in_color, 1.0);
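The rasterizer interpolates the vertex shader's out_color across the triangle before the fragment shader receives it as in_color. Because every vertex here has w = 1.0, perspective-correct interpolation reduces to plain barycentric interpolation. The sketch below models that math on the CPU; it is not how GPUs implement it. The weights are computed directly in NDC, since the affine viewport transform leaves them unchanged. Vec2, Vec3, edge() and interpolate() are hypothetical names.

```cpp
#include <array>
#include <cstddef>

using Vec2 = std::array<float, 2>;
using Vec3 = std::array<float, 3>;

// Twice the signed area of triangle (a, b, c).
[[nodiscard]] constexpr auto edge(Vec2 const& a, Vec2 const& b, Vec2 const& c)
    -> float {
  return ((b[0] - a[0]) * (c[1] - a[1])) - ((b[1] - a[1]) * (c[0] - a[0]));
}

// Interpolates per-vertex colors at point p using barycentric weights.
[[nodiscard]] constexpr auto interpolate(std::array<Vec2, 3> const& pos,
                                         std::array<Vec3, 3> const& col,
                                         Vec2 const& p) -> Vec3 {
  auto const area = edge(pos[0], pos[1], pos[2]);
  // weight of each vertex: area of the sub-triangle opposite it / total area.
  auto const w0 = edge(pos[1], pos[2], p) / area;
  auto const w1 = edge(pos[2], pos[0], p) / area;
  auto const w2 = edge(pos[0], pos[1], p) / area;
  auto ret = Vec3{};
  for (std::size_t i = 0; i < ret.size(); ++i) {
    ret[i] = (w0 * col[0][i]) + (w1 * col[1][i]) + (w2 * col[2][i]);
  }
  return ret;
}
```

With the shader's positions and colors, a point halfway along the bottom edge gets an even mix of red and green and no blue.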

Make sure to recompile both shaders into assets/.

Then set a black clear color:

// ...

.setClearValue(vk::ClearColorValue{0.0f, 0.0f, 0.0f, 1.0f});

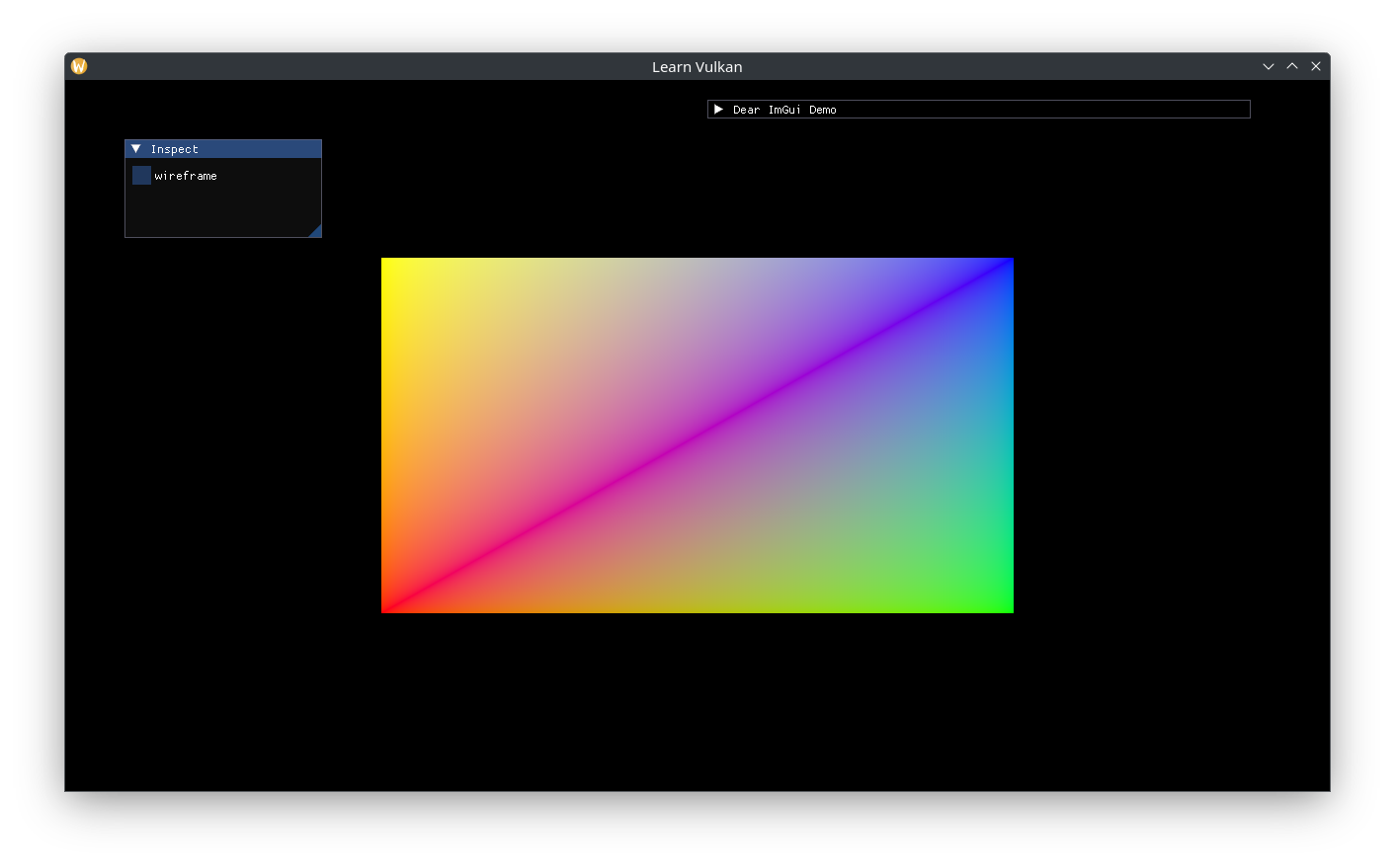

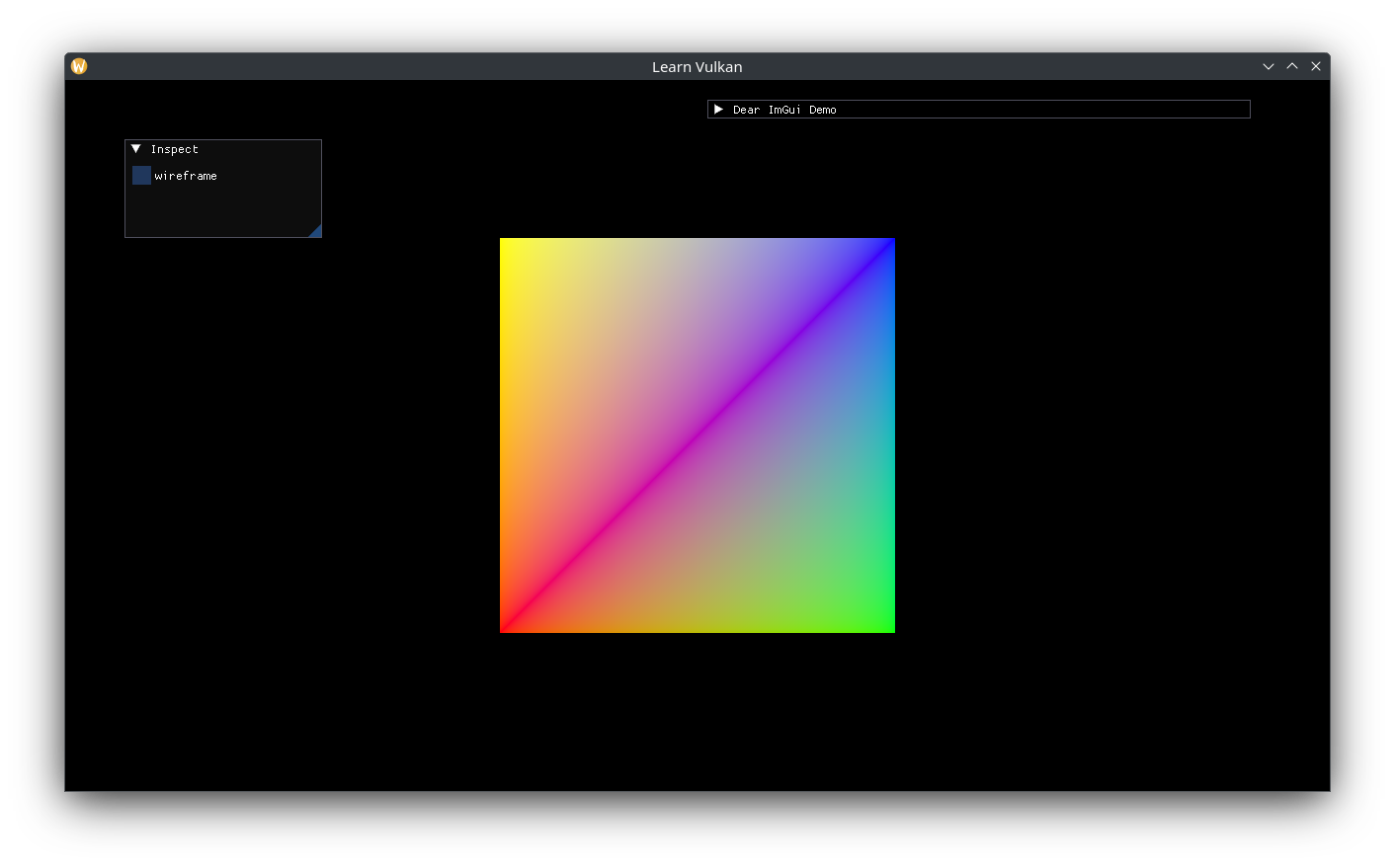

This gives us the renowned Vulkan sRGB triangle:

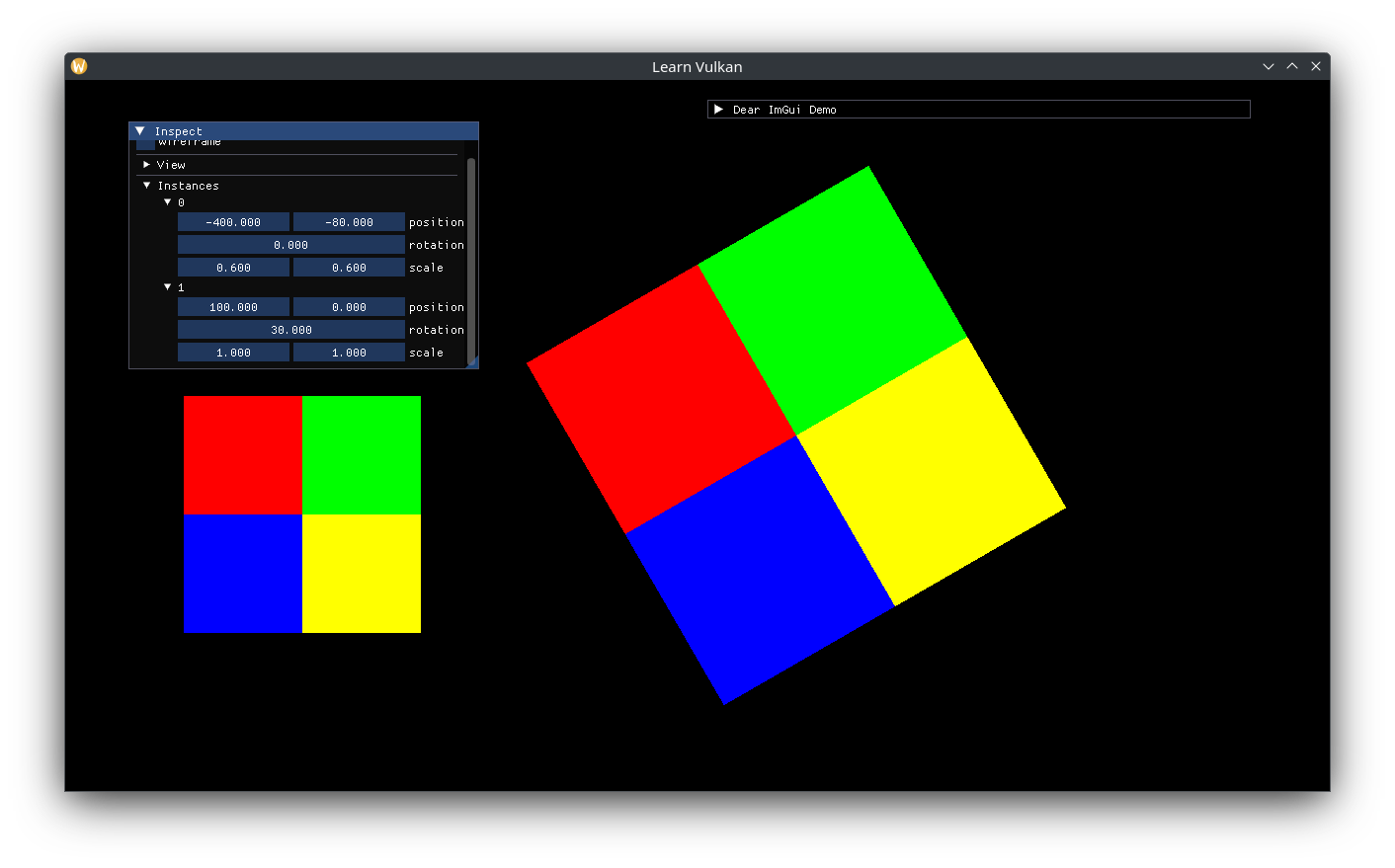

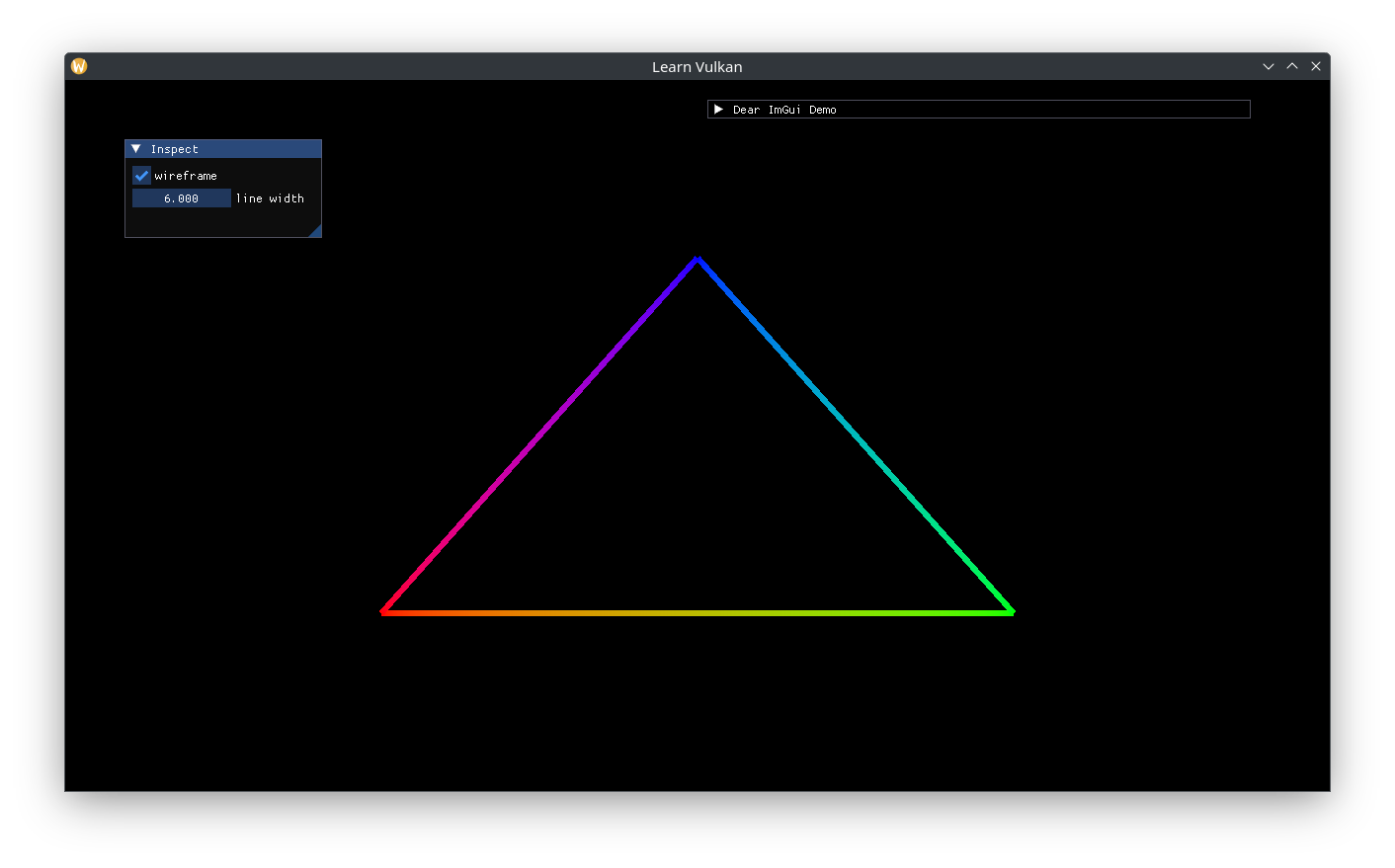

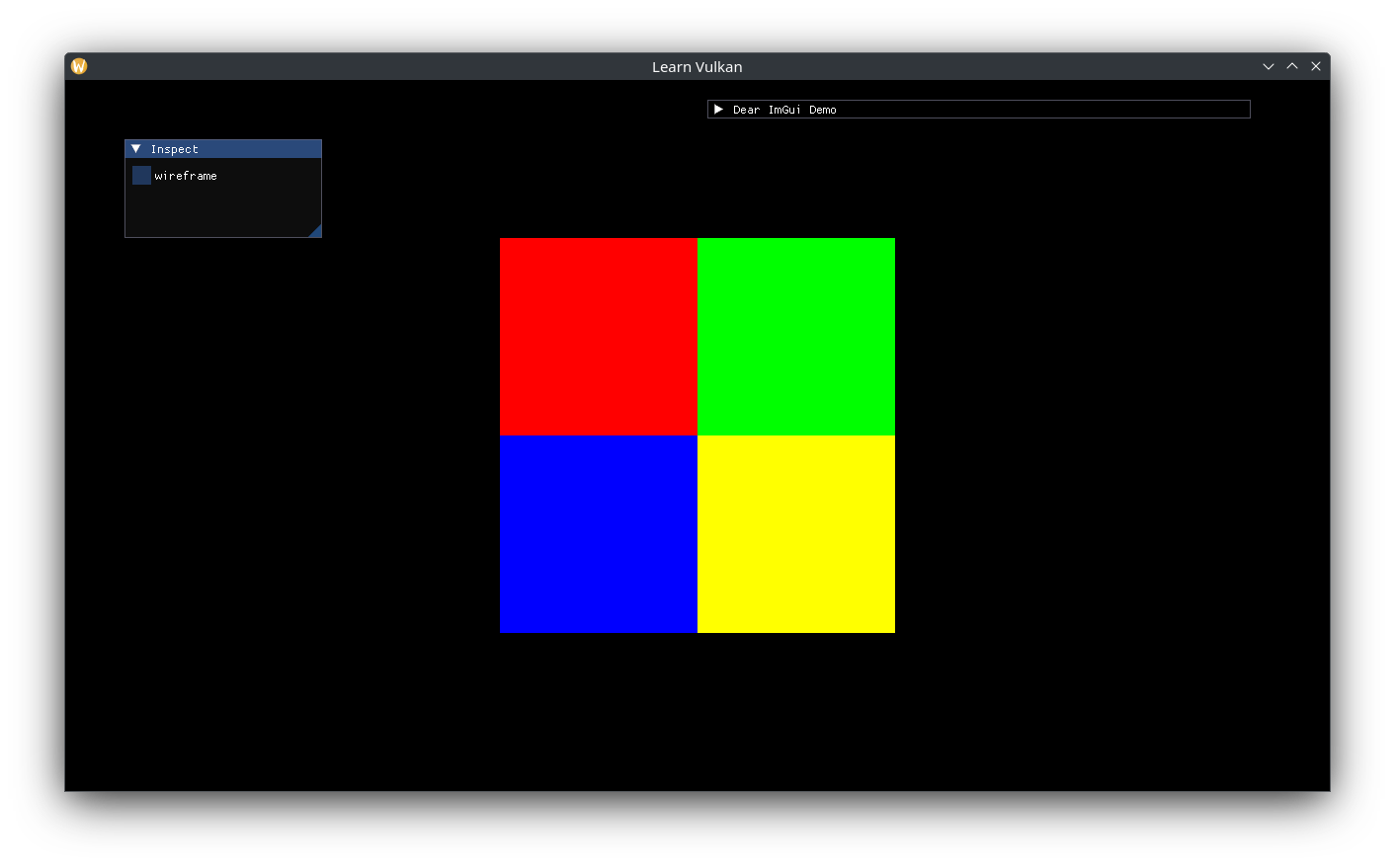

Modifying Dynamic State

We can use an ImGui window to inspect and tweak some pipeline state:

ImGui::SetNextWindowSize({200.0f, 100.0f}, ImGuiCond_Once);

if (ImGui::Begin("Inspect")) {

if (ImGui::Checkbox("wireframe", &m_wireframe)) {

m_shader->polygon_mode =

m_wireframe ? vk::PolygonMode::eLine : vk::PolygonMode::eFill;

}

if (m_wireframe) {

auto const& line_width_range =

m_gpu.properties.limits.lineWidthRange;